Deep neural networks (DNNs) have shown immense promise for analyzing large datasets and have transformed research across many scientific domains. In recent years, computer scientists have trained DNN models to analyze chemical data and identify potential applications. Inspired by the influential paper “Scaling Laws for Neural Language Models” by Kaplan et al., a team of researchers at the Massachusetts Institute of Technology (MIT) set out to investigate the neural scaling behavior of large DNN-based models used to generate chemical compositions and to learn interatomic potentials. Their results, published in Nature Machine Intelligence, show substantial performance improvements when these models are scaled up in size and trained on more data.

Nathan Frey and his colleagues began the study in 2021, before the release of prominent AI platforms such as ChatGPT and DALL-E 2. At that time, the question of how DNNs scale was highly pertinent, yet few studies had investigated scaling in the physical or life sciences. The researchers focused on two distinct types of models for chemical data analysis: a large language model (LLM) and a model based on a graph neural network (GNN). The two models serve different purposes: the LLM generates chemical compositions, while the GNN learns the potentials between atoms in chemical substances.

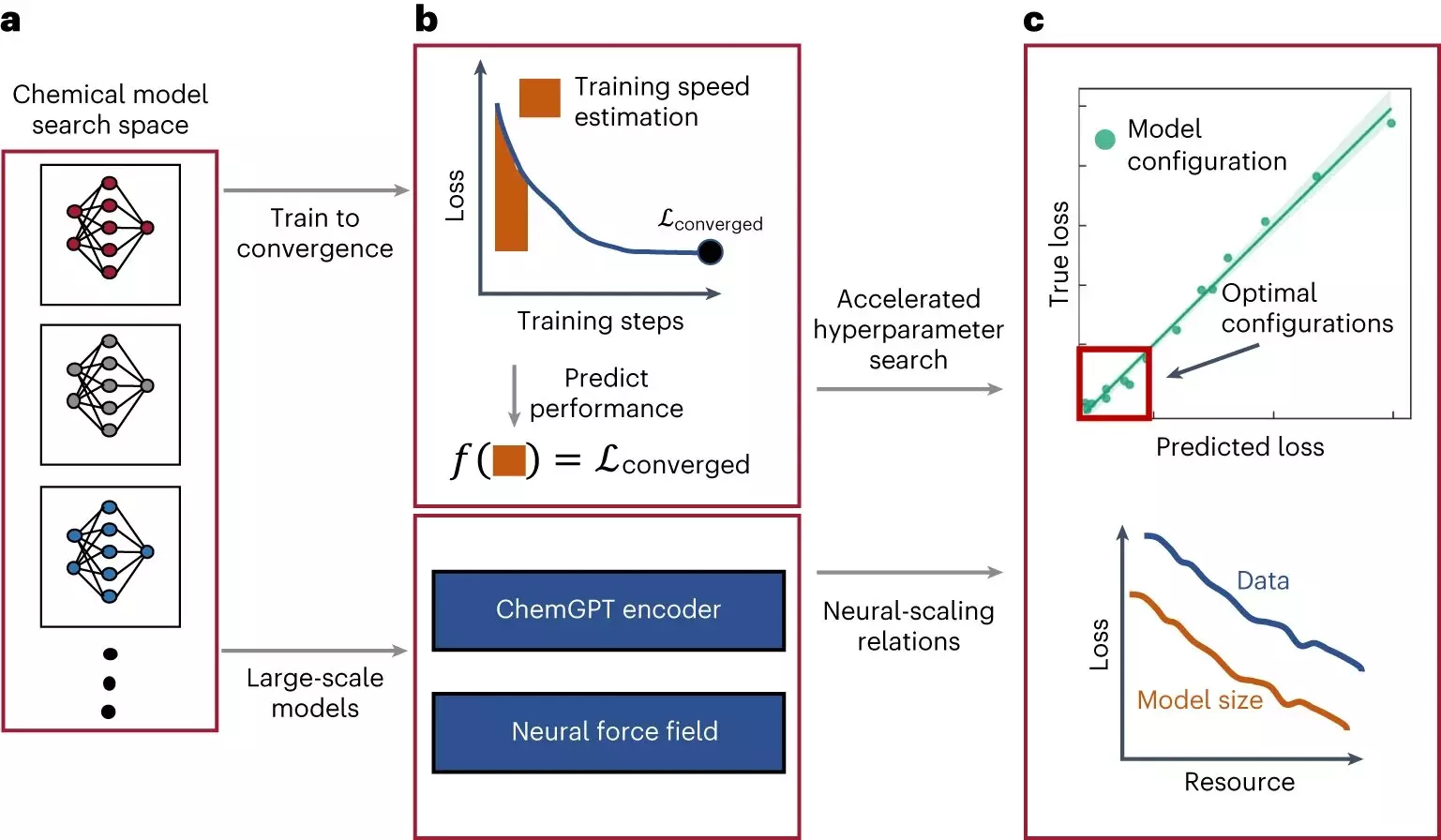

To understand how the LLM, named ChemGPT, and the GNNs scale, Frey and his team systematically examined how model size and training dataset size affect the relevant performance metrics. This analysis allowed them to measure how quickly each model improved as it was scaled up. Like ChatGPT, ChemGPT was trained to predict the next token in a string representing a molecule. The GNNs, by contrast, were trained to predict a molecule's energy and the forces acting on its atoms. Through this exploration, the researchers found neural scaling behavior in chemical models similar to that previously observed in large language models and vision models.
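Measuring "how quickly a model improves" is usually done by fitting a power law to loss versus scale. The minimal sketch below assumes the form loss ≈ a · N^(−α) common in the neural-scaling literature; the function name `fit_power_law` and the data points are hypothetical illustrations, not the study's code or results. It fits a straight line in log-log space, where the slope gives −α.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss ≈ a * size**(-alpha) by ordinary least squares in log-log space.

    Taking logarithms turns the power law into a straight line,
    log(loss) = log(a) - alpha * log(size), so a linear fit recovers a and alpha.
    Returns the tuple (a, alpha). (Hypothetical helper for illustration.)
    """
    if len(sizes) != len(losses) or len(sizes) < 2:
        raise ValueError("need at least two (size, loss) pairs of equal length")
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # slope of the log-log line = -alpha
    intercept = mean_y - slope * mean_x  # intercept = log(a)
    return math.exp(intercept), -slope


# Hypothetical synthetic data generated from loss = 5 * N**-0.1.
# These are NOT measurements from the study; they only exercise the fit.
model_sizes = [1e5, 1e6, 1e7, 1e8]
losses = [5.0 * n ** -0.1 for n in model_sizes]

a, alpha = fit_power_law(model_sizes, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")  # prints: a = 5.000, alpha = 0.100
```

A larger α means the loss falls faster as the model grows. The same function can be applied with training dataset size on the x-axis, which mirrors the two axes the researchers varied.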

The study found that chemical models have not yet reached any fundamental limit to scaling, which suggests considerable room for further gains from additional computational resources and larger datasets. Notably, incorporating physics into GNNs through a property called “equivariance” drastically improved scaling efficiency. This is a significant result, because algorithms that change scaling behavior are difficult to develop. The findings give researchers a clearer picture of what AI models can do in chemistry research and how far their performance can be improved by scaling up. This knowledge can inform future studies and help improve not only these models but also other DNN-based techniques tailored to specific scientific applications.
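Equivariance means that if a molecule is rotated, the predicted energy stays the same and the predicted forces rotate with it. The toy sketch below illustrates only that property, using a hand-written harmonic pair potential as a hypothetical stand-in; it is not an equivariant GNN and not the architecture used in the study. The names `pair_energy_and_forces` and `rotate_z`, the coordinates, and the parameters `r0` and `k` are all illustrative assumptions. Because the energy depends only on interatomic distances, rotating the atoms leaves it unchanged and rotates each force vector by the same rotation.

```python
import math


def pair_energy_and_forces(positions, r0=1.0, k=1.0):
    """Toy harmonic pair potential E = sum_{i<j} (k/2) * (r_ij - r0)**2.

    The energy depends only on interatomic distances r_ij, so it is invariant
    under rotations; the forces F_i = -dE/dx_i rotate together with the atoms.
    """
    n = len(positions)
    energy = 0.0
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [positions[i][c] - positions[j][c] for c in range(3)]
            r = math.sqrt(sum(x * x for x in d))
            energy += 0.5 * k * (r - r0) ** 2
            # Negative gradient of the pair term with respect to atom i.
            coeff = -k * (r - r0) / r
            for c in range(3):
                forces[i][c] += coeff * d[c]
                forces[j][c] -= coeff * d[c]  # equal and opposite on atom j
    return energy, forces


def rotate_z(v, theta):
    """Rotate a 3D vector by angle theta (radians) about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1], v[2]]


# Hypothetical three-atom configuration (arbitrary units).
atoms = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [0.0, 0.8, 0.3]]
theta = 0.7

e_orig, f_orig = pair_energy_and_forces(atoms)
e_rot, f_rot = pair_energy_and_forces([rotate_z(p, theta) for p in atoms])

# Invariance: the energy is unchanged by the rotation.
energy_gap = abs(e_orig - e_rot)
# Equivariance: forces of the rotated molecule equal the rotated original forces.
force_gap = max(
    abs(a - b)
    for f_r, f_o in zip(f_rot, f_orig)
    for a, b in zip(f_r, rotate_z(f_o, theta))
)
print(energy_gap < 1e-12, force_gap < 1e-12)  # prints: True True
```

A rotation about a single axis is used for brevity; the same holds for any 3D rotation. Equivariant GNNs are built so that this symmetry holds for the learned model by construction rather than having to be learned from data. One common intuition, offered here as a hedge rather than as the study's explanation, is that this makes each training example more informative.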

The use of deep neural networks for chemical data analysis holds great promise for scientific research. Because these models continue to improve as they scale, researchers can use them to analyze and interpret increasingly large datasets. The performance gains from scaling open new avenues for discovering chemical compositions and developing applications in drug discovery, among other fields. Accurate prediction of the energies and forces within molecules deepens our understanding of chemical interactions and can accelerate development across scientific domains.

While this study sheds light on the benefits of scaling up deep neural networks for chemical data analysis, much remains to be explored. The researchers emphasize that more computational resources and larger datasets will be needed to fully realize the potential of these models and push the limits of their scalability. Incorporating additional physics-inspired techniques into graph neural networks may further improve scaling efficiency. Continued research in this field could drive further advances and discoveries in chemistry.

The research by Frey and his team provides valuable insights into scaling up deep neural networks for chemical data analysis. Drawing on the scaling behavior observed in neural language models, they showed that these models can improve significantly when scaled up in model size and dataset size. The findings lay the groundwork for future research and development, opening new possibilities in chemistry and other scientific applications.
