Artificial intelligence (AI) is increasingly at the forefront of technological innovation, yet its effectiveness can be severely hampered by limitations inherent in traditional computing architectures. One significant bottleneck that has plagued AI development is known as the von Neumann bottleneck. It arises because memory and processing are physically separate: the rate at which data can be moved between the memory unit and the processor cannot keep pace with the speed at which the processor can compute. In an age where datasets are growing rapidly, this bottleneck caps the performance and efficiency of AI systems.

Researchers at Peking University, led by Professor Sun Zhong, recognized the potential to address this severe limitation. Their study, recently published in the journal Device, introduces a groundbreaking dual in-memory computing (dual-IMC) scheme that has the potential to revolutionize the way machine learning algorithms function. The dual-IMC approach aims to not only boost the speed of computations but also enhance energy efficiency, thereby creating a more sustainable framework for AI applications.

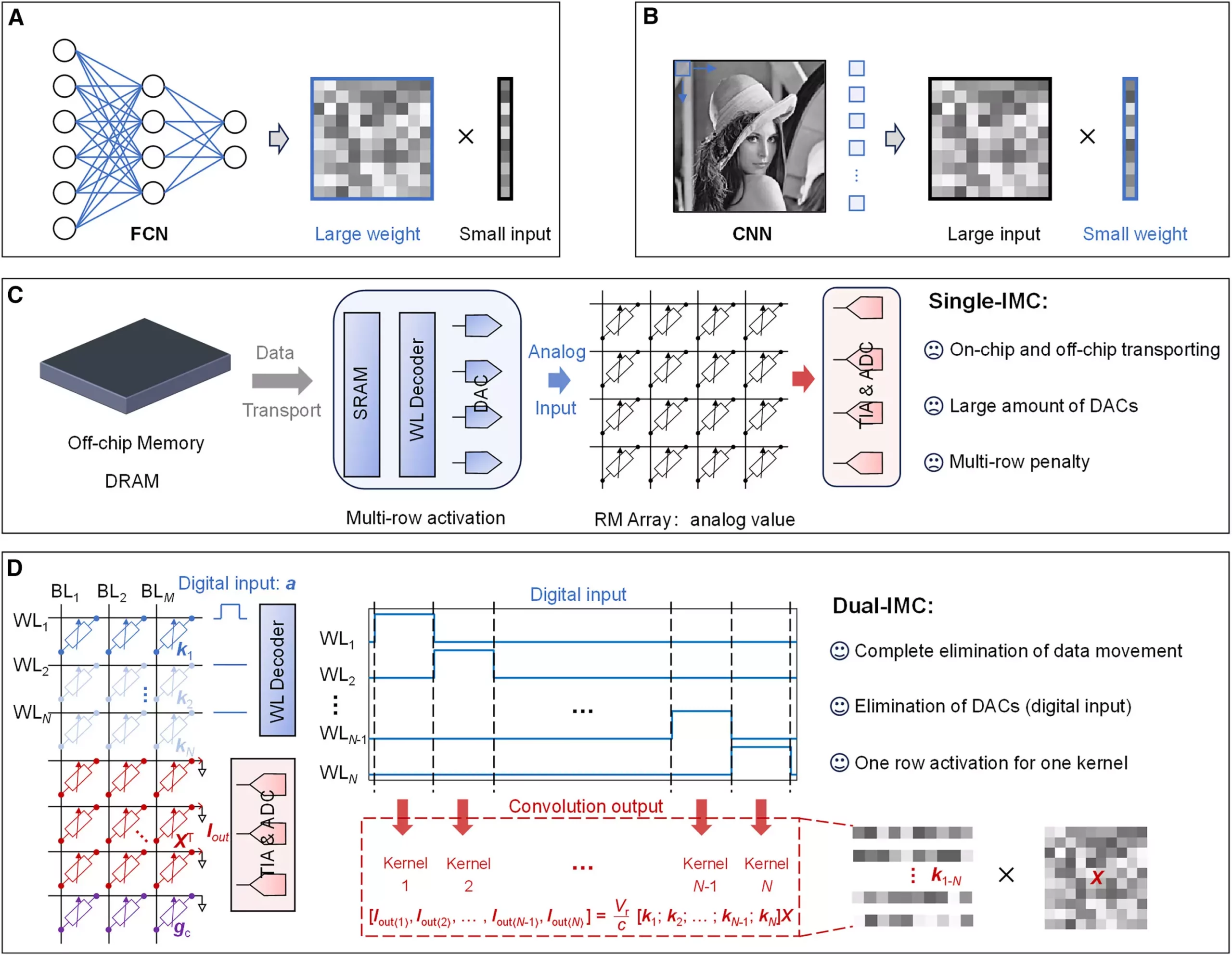

In-memory computing (IMC) represents a paradigm shift in data handling. By performing data operations directly within memory units rather than shuttling data to a separate processor, IMC reduces the time and energy spent on transferring data back and forth between the memory and processing units. This is crucial for operations like matrix-vector multiplication (MVM), a fundamental operation in neural networks.
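
To make the operation concrete, here is a minimal Python sketch of MVM. The function names and example values are illustrative assumptions, not taken from the study. The second function shows the usual idealized picture of an analog memory array, in which stored conductances play the role of weights and applied voltages play the role of inputs. It is a textbook simplification of conventional (single) IMC, not a model of the dual-IMC circuit.

```python
def mvm(weights, inputs):
    """Matrix-vector multiplication: y[i] = sum over j of weights[i][j] * inputs[j]."""
    if any(len(row) != len(inputs) for row in weights):
        raise ValueError("each weight row must have the same length as the input vector")
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def ideal_array_currents(conductances, voltages):
    """Idealized analog array (an assumption for illustration only).

    Each cell passes a current G * V (Ohm's law), and the currents on a
    shared line add up (Kirchhoff's current law), so the line currents
    equal the matrix-vector product of conductances and voltages.
    Real devices add noise, wire resistance, and nonlinearity.
    """
    return mvm(conductances, voltages)


# Hypothetical 2x3 weight matrix and 3-element input vector.
weights = [[1, 2, 3],
           [4, 5, 6]]
inputs = [1, 0, 2]
outputs = mvm(weights, inputs)
print(outputs)
```

In a processor-centric design, every weight in the matrix has to be fetched from memory before it can be multiplied; in an IMC design, each multiply-accumulate happens where the weight is already stored.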

Neural networks, models that attempt to simulate human cognitive functions, require substantial amounts of data to learn and make predictions. As these networks grow more complex, the need for rapid data movement becomes acute, and the resulting inefficiencies are largely tied to the limitations of classical von Neumann architectures. The dual-IMC scheme addresses this by storing both the weights and the inputs of a neural network in the memory array. Computations can then occur locally, with far less data transported between units, which increases the speed and efficiency of the entire process.
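
The difference in data movement can be illustrated with a deliberately simple counting model. Everything in this sketch is an assumption made for illustration: the function name, the scheme labels, and the accounting itself, which counts only the operand values that must cross the boundary between memory and compute for one MVM. It ignores writing inputs into memory, reading out results, and all circuit-level detail, and it does not reproduce any measurement from the study.

```python
def operand_transfers(rows, cols, scheme):
    """Toy count of operand values moved to the compute site for one MVM.

    rows x cols is the weight matrix size; the input vector has cols entries.
    This is an illustrative model, not data from the paper.
    """
    if scheme == "von_neumann":
        # Every weight and every input is fetched from memory.
        return rows * cols + cols
    if scheme == "single_imc":
        # Weights stay in the array; inputs are still sent in from outside
        # (in analog designs, through digital-to-analog converters).
        return cols
    if scheme == "dual_imc":
        # Weights and inputs both already reside in memory.
        return 0
    raise ValueError(f"unknown scheme: {scheme}")


# Hypothetical 256 x 256 layer.
for scheme in ("von_neumann", "single_imc", "dual_imc"):
    print(scheme, operand_transfers(256, 256, scheme))
```

Even in this crude model, the trend matches the argument above: keeping the weights in place removes most of the traffic, and keeping the inputs in place as well removes what remains.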

The dual-IMC methodology is not merely a theoretical concept; it has been put into practice and has demonstrated significant real-world advantages. By utilizing resistive random-access memory (RRAM) devices, researchers were able to examine the performance of dual-IMC for various applications, including signal recovery and image processing. Consistent findings across experiments indicate several notable benefits:

1. **Enhanced Efficiency:** Because computations are carried out fully in memory, the time and energy normally spent on accessing off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM) are significantly reduced. This reduction not only accelerates processing but also shrinks the energy footprint of these operations.

2. **Optimized Performance:** With the major bottleneck of data movement largely removed, the dual-IMC approach has been shown to improve overall computing performance. Because data is handled locally, processing speed is no longer constrained by the slower pace of data transfers.

3. **Cost-Effectiveness:** Eliminating the digital-to-analog converters (DACs) that single-IMC schemes require lowers production costs and reduces the overall complexity of chip designs. The savings are not only monetary: power requirements and chip area shrink as well, which can have far-reaching effects on manufacturing.

The advent of the dual-IMC scheme heralds a new era for computing architectures, particularly within the realm of AI. As the demand for efficient data-processing capabilities continues to rise, the insights provided by this research could catalyze significant innovations both in hardware development and in the design of future AI models.

The opportunities that arise from developing more efficient computing frameworks are immense, extending beyond mere performance enhancements. By alleviating the bottlenecks that have historically limited the potential of AI, researchers can explore new avenues, paving the way for applications that were once confined to the realm of science fiction. Thus, the work conducted by Professor Sun Zhong's team is not merely an incremental improvement; it is a potentially transformative approach that could reshape the landscape of artificial intelligence as we know it.
