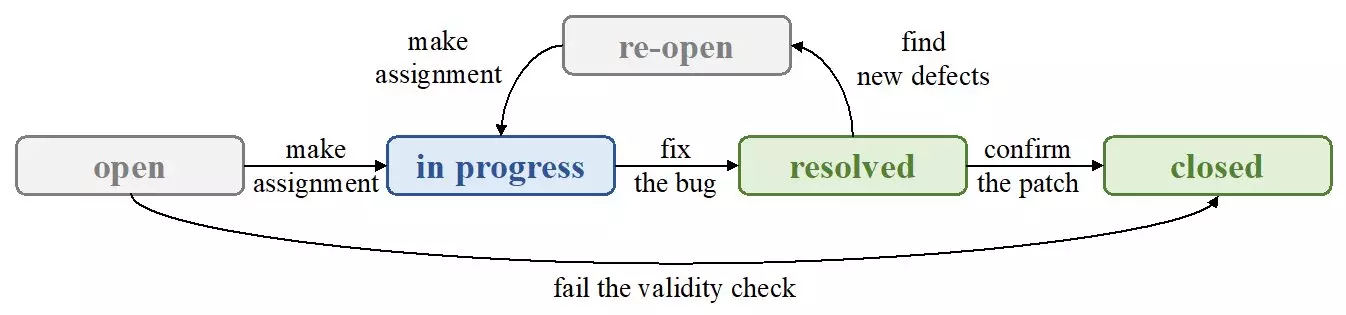

Automatic bug assignment, which routes each new bug report to the developer most likely to fix it, has advanced significantly over the last decade. Challenges persist, however, particularly with textual bug reports. These reports often contain essential details about software defects that help engineers diagnose and fix issues. But text is inherently noisy: ambiguities, inconsistencies, and different ways of describing the same problem make it hard for traditional Natural Language Processing (NLP) techniques to produce reliable results. Rather than improving accuracy, these textual complications can obscure meaningful signals and make bug assignment harder.

The Research Revolutionizing Bug Assignment Techniques

A pivotal study led by Zexuan Li, recently published in Frontiers of Computer Science, sheds light on this dilemma by comparing the effectiveness of textual and nominal features in automatic bug assignment. The research team applied a modern deep-learning NLP technique, TextCNN, to test whether advances in NLP could significantly improve the analysis of textual features. Contrary to expectations, the findings showed that even with this more sophisticated method, textual features failed to outperform nominal features. This result invites a critical re-examination of the foundations on which bug assignment strategies are built.
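TextCNN comes from Kim's 2014 sentence-classification model. It slides convolution filters of several widths over a sequence of word embeddings, uses max-over-time pooling to keep each filter's strongest response, and feeds the pooled values to a classifier. The sketch below is a minimal, dependency-free forward pass written under our own assumptions: one filter per width, random untrained weights, and one output class per candidate developer. It is not the study's model, whose architecture, hyperparameters, and training setup are not described in this article. The function name `textcnn_forward` is hypothetical, and a real implementation would use a deep-learning framework and learn the weights.

```python
import math
import random


def textcnn_forward(token_ids, embeddings, filters, out_weights, out_bias):
    """Forward pass of a minimal TextCNN-style classifier (illustrative only).

    token_ids:   vocabulary indices for one tokenized bug report
    embeddings:  one embedding vector per vocabulary entry
    filters:     list of (width, kernel, bias); kernel has `width` rows of
                 embedding-sized weights
    out_weights: one weight row per class, one weight per filter
    out_bias:    one bias per class
    Returns softmax probabilities, one per candidate developer (class).
    """
    x = [embeddings[t] for t in token_ids]  # seq_len rows of dim values
    pooled = []
    for width, kernel, bias in filters:
        activations = []
        # Convolve: slide the filter over every window of `width` tokens.
        for start in range(len(x) - width + 1):
            s = bias
            for i in range(width):
                s += sum(w * v for w, v in zip(kernel[i], x[start + i]))
            activations.append(max(0.0, s))  # ReLU non-linearity
        # Max-over-time pooling keeps each filter's strongest response;
        # a report shorter than the filter width contributes 0.0.
        pooled.append(max(activations) if activations else 0.0)
    # Fully connected layer over the pooled features, then softmax.
    logits = [b + sum(w * p for w, p in zip(row, pooled))
              for row, b in zip(out_weights, out_bias)]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Usage with random, untrained weights (illustration only).
rng = random.Random(0)
vocab_size, dim, n_devs = 20, 8, 3
emb = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]
filters = [
    (w, [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(w)], 0.0)
    for w in (2, 3, 4)
]
out_w = [[rng.uniform(-1, 1) for _ in filters] for _ in range(n_devs)]
out_b = [0.0] * n_devs
probs = textcnn_forward([1, 5, 7, 2, 9], emb, filters, out_w, out_b)
print([round(p, 3) for p in probs])
```

The weights are random, so the printed probabilities carry no meaning. The sketch only traces how the data flows from tokens to per-developer scores.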

Understanding Nominal Features: The Real Game Changer

The study poses and addresses three fundamental questions about the roles of textual and nominal features. The first examines how textual features perform when enhanced by deep learning. Surprisingly, Li's findings show that nominal features, which are essentially indicators of developer preferences, emerged as the most significant contributors to successful bug assignment.
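Nominal features are categorical fields that take one of a fixed set of values, unlike free text. Classifiers such as SVM need numeric input, so a common preprocessing step is one-hot encoding, which gives each (field, value) pair its own 0/1 column. We assume this step for illustration; the article does not confirm it for the study. The field names `product`, `component`, and `reporter` and the helpers `build_index` and `one_hot` below are hypothetical, because the article does not list which nominal fields the study used.

```python
def build_index(records, fields):
    """Assign a column to every (field, value) pair seen in the training data."""
    index = {}
    for rec in records:
        for field in fields:
            key = (field, rec[field])
            if key not in index:
                index[key] = len(index)
    return index


def one_hot(rec, fields, index):
    """Encode one report as a 0/1 vector; values unseen in training stay all-zero."""
    vec = [0] * len(index)
    for field in fields:
        col = index.get((field, rec[field]))
        if col is not None:
            vec[col] = 1
    return vec


# Hypothetical nominal fields and reports, for illustration only.
FIELDS = ["product", "component", "reporter"]
train = [
    {"product": "Core", "component": "UI", "reporter": "alice"},
    {"product": "Core", "component": "Network", "reporter": "bob"},
    {"product": "Tools", "component": "UI", "reporter": "alice"},
]
index = build_index(train, FIELDS)
new_report = {"product": "Core", "component": "UI", "reporter": "carol"}
print(one_hot(new_report, FIELDS, index))  # [1, 1, 0, 0, 0, 0]
```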

What makes nominal features so impactful? Their simplicity and direct relevance to developers' choices make them a more reliable basis for matching each bug to the right developer. They also narrow the search space for classifiers, which streamlines classification and improves accuracy. Li's team used a wrapper-based feature selection method with a bidirectional search strategy to show that shifting the focus toward nominal features could reshape how bug assignment is approached.
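A wrapper method judges each candidate feature subset by training and evaluating a classifier on it. A bidirectional strategy runs a forward search, which adds features, and a backward search, which removes them, at the same time until the two meet. The sketch below illustrates this idea under several stated assumptions:

- The scoring classifier is a hypothetical majority-vote lookup (`lookup_accuracy`). It stands in for the Decision Tree or SVM the study used, purely to keep the example short.
- The dataset is invented.
- Scoring uses a held-out set. In practice this should be a validation split kept separate from the final test data.

It is not the study's exact procedure.

```python
from collections import Counter, defaultdict


def lookup_accuracy(train, test, feats):
    """Wrapper score: accuracy of a majority-vote lookup classifier that
    predicts the most frequent assignee seen for each combination of `feats`
    (unseen combinations fall back to the overall most frequent assignee)."""
    votes = defaultdict(Counter)
    for rec in train:
        votes[tuple(rec[f] for f in feats)][rec["assignee"]] += 1
    fallback = Counter(r["assignee"] for r in train).most_common(1)[0][0]
    hits = 0
    for rec in test:
        key = tuple(rec[f] for f in feats)
        pred = votes[key].most_common(1)[0][0] if key in votes else fallback
        hits += pred == rec["assignee"]
    return hits / len(test)


def bidirectional_select(features, score):
    """Bidirectional wrapper search. A forward pass grows `fwd` from the empty
    set while a backward pass shrinks `bwd` from the full set; features added
    by the forward pass are never removed, and features removed by the backward
    pass are never added, so the two sets meet after len(features) steps."""
    fwd, bwd = set(), set(features)
    best_set, best_score = set(bwd), score(sorted(bwd))

    def consider(subset):
        nonlocal best_set, best_score
        s = score(sorted(subset))
        # Prefer higher scores, then smaller subsets on ties.
        if s > best_score or (s == best_score and len(subset) < len(best_set)):
            best_set, best_score = set(subset), s

    while fwd != bwd:
        # Forward step: add the open feature that most improves `fwd`.
        f = max(sorted(bwd - fwd), key=lambda c: score(sorted(fwd | {c})))
        fwd.add(f)
        consider(fwd)
        if fwd == bwd:
            break
        # Backward step: drop the open feature whose removal costs least.
        g = max(sorted(bwd - fwd), key=lambda c: score(sorted(bwd - {c})))
        bwd.discard(g)
        consider(bwd)
    return sorted(best_set), best_score


def bug(component, severity, reporter, assignee):
    """Hypothetical bug record with three nominal fields and a label."""
    return {"component": component, "severity": severity,
            "reporter": reporter, "assignee": assignee}


TRAIN = [
    bug("UI", "major", "alice", "dev1"), bug("UI", "minor", "bob", "dev1"),
    bug("Net", "major", "alice", "dev2"), bug("Net", "minor", "carol", "dev2"),
    bug("DB", "major", "bob", "dev3"), bug("DB", "minor", "carol", "dev3"),
]
TEST = [
    bug("UI", "minor", "carol", "dev1"),
    bug("Net", "major", "bob", "dev2"),
    bug("DB", "minor", "alice", "dev3"),
]
selected, acc = bidirectional_select(
    ["component", "reporter", "severity"],
    lambda feats: lookup_accuracy(TRAIN, TEST, feats),
)
print(selected, acc)  # ['component'] 1.0
```

On this toy data the search keeps only `component`, because `reporter` and `severity` add noise without helping the lookup classifier. Each pass is forbidden from undoing the other's choices, which guarantees the search ends after one step per feature.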

Experimental Insights: Bridging Theory and Application

Through rigorous experimentation with various classifiers, including Decision Tree and Support Vector Machine (SVM), the researchers evaluated five projects of differing scope and complexity. The results were illuminating: without relying heavily on text, nominal features achieved accuracy levels ranging from 11% to 25%. This finding underscores the potential of simpler data representations for improving automated systems.
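To make the Decision Tree setting concrete, the sketch below builds an ID3-style tree directly over nominal features. Each node splits on the field with the highest information gain, and each leaf names an assignee. This is an illustrative assumption, not the study's implementation: the article does not say which decision-tree variant or library was used, the toy data is invented, and the SVM side of the experiments is not sketched.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def build_tree(rows, feats, target="assignee"):
    """ID3-style tree over nominal features. Each internal node splits on the
    feature with the highest information gain; leaves are assignee names."""
    labels = [r[target] for r in rows]
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not feats:
        return majority

    def gain(f):
        groups = {}
        for r in rows:
            groups.setdefault(r[f], []).append(r[target])
        remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
        return entropy(labels) - remainder

    best = max(feats, key=gain)
    if gain(best) <= 1e-12:
        return majority  # no feature separates the assignees any further
    rest = [f for f in feats if f != best]
    children = {
        value: build_tree([r for r in rows if r[best] == value], rest, target)
        for value in {r[best] for r in rows}
    }
    return (best, children, majority)


def predict(tree, rec):
    """Walk the tree; an unseen feature value falls back to the node majority."""
    while isinstance(tree, tuple):
        feat, children, majority = tree
        if rec[feat] not in children:
            return majority
        tree = children[rec[feat]]
    return tree


def bug(component, severity, reporter, assignee):
    """Hypothetical bug record with three nominal fields and a label."""
    return {"component": component, "severity": severity,
            "reporter": reporter, "assignee": assignee}


TRAIN = [
    bug("UI", "major", "alice", "dev1"), bug("UI", "minor", "bob", "dev1"),
    bug("Net", "major", "alice", "dev2"), bug("Net", "minor", "carol", "dev2"),
    bug("DB", "major", "bob", "dev3"), bug("DB", "minor", "carol", "dev3"),
]
TEST = [bug("UI", "minor", "carol", "dev1"), bug("Net", "major", "bob", "dev2"),
        bug("DB", "minor", "alice", "dev3")]

tree = build_tree(TRAIN, ["component", "reporter", "severity"])
print(tree[0])                           # component
print([predict(tree, r) for r in TEST])  # ['dev1', 'dev2', 'dev3']
```

Nominal fields suit this kind of tree naturally. Each distinct value becomes a branch, so no text preprocessing is involved.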

The study also points to future directions. It suggests that integrating source files to construct a knowledge graph of the relationships between key features and their corresponding terms could yield better embeddings. This approach fits the broader trend of strengthening NLP models by combining textual data with structured data.
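The proposed knowledge graph is future work, and the article does not specify its design. As a rough, hypothetical starting point, the sketch below links nominal feature values (for example `component=Network`) to terms split from the names of the source files fixed for each bug. Each edge is weighted by how many bugs share that feature-term pair. The `fixed_files` field and both helper functions are assumptions for illustration. A real knowledge graph would likely use richer entity and relation types, and embeddings would come from a separate graph-embedding step applied to it, such as node2vec.

```python
import os
import re
from collections import defaultdict


def path_terms(path):
    """Split a source path such as 'net/HttpClient.java' into lower-case terms
    ('net', 'http', 'client'), dropping the file extension."""
    stem, _ = os.path.splitext(path)
    terms = []
    for part in re.split(r"[/\\_\-.]+", stem):
        # Break camelCase / PascalCase identifiers into separate words.
        terms += [t.lower() for t in re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+", part)]
    return terms


def build_feature_term_graph(bugs, fields):
    """Weighted bipartite graph: 'field=value' nodes linked to terms from the
    files fixed for each bug; edge weight counts the bugs sharing that pair."""
    graph = defaultdict(lambda: defaultdict(int))
    for b in bugs:
        terms = {t for path in b["fixed_files"] for t in path_terms(path)}
        for field in fields:
            node = f"{field}={b[field]}"
            for t in terms:
                graph[node][t] += 1
    return graph


# Hypothetical bug records for illustration only.
bugs = [
    {"component": "Network", "fixed_files": ["net/HttpClient.java"]},
    {"component": "Network", "fixed_files": ["net/SocketPool.java", "net/HttpClient.java"]},
    {"component": "UI", "fixed_files": ["ui/MainWindow.java"]},
]
graph = build_feature_term_graph(bugs, ["component"])
print(sorted(graph["component=Network"].items()))
# [('client', 2), ('http', 2), ('net', 2), ('pool', 1), ('socket', 1)]
```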

The Future of Bug Assignment: A Shift in Perspective

As software development continues to evolve, so must the tools used to maintain quality and efficiency. Li's research calls for a shift in perspective: textual bug reports still provide useful context, but nominal features may be the more effective basis for automatic bug assignment. Emphasizing this simple yet powerful approach could ultimately lead to more robust and reliable bug assignment in practice.
