In the evolving landscape of artificial intelligence, particularly in the realm of language models, accuracy and efficiency remain paramount. Researchers continuously strive to enhance the capabilities of large language models (LLMs), ensuring they are not only versatile but also reliable. A recent innovation from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) showcases a novel methodology called Co-LLM, which enables LLMs to collaborate effectively, mimicking the way humans seek assistance from experts.

While LLMs like GPT-3 possess impressive general knowledge, they often struggle to deliver precise answers in specialized domains such as medicine, mathematics, or complex reasoning. Traditional LLMs can generate text but are poor at recognizing the boundaries of their own knowledge. For instance, if asked about specific medical formulations or complex mathematical equations, these models may confidently provide incorrect information. Historically, improving their accuracy has relied on refining the LLMs themselves through extensive training data or intricate mathematical models. However, this approach can allocate resources inefficiently, since every query requires the same computational effort regardless of its complexity.

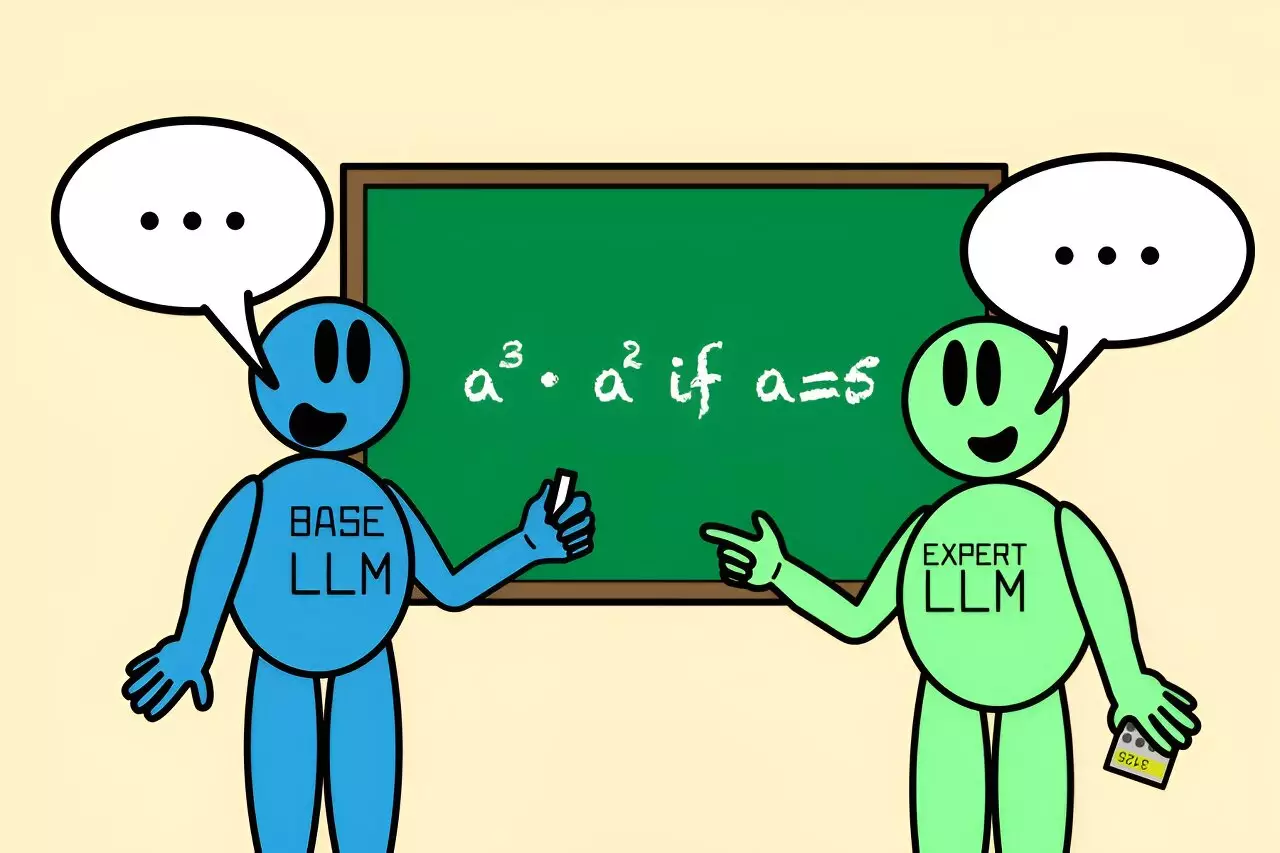

Co-LLM emerges as a transformative approach, introducing a mechanism that fosters collaboration between a general-purpose LLM and a specialized variant. Instead of fundamentally altering the underlying language models, Co-LLM orchestrates their interaction through a system akin to a project manager. This allows the general LLM to begin a response while assessing its accuracy in real time. When it encounters uncertainty, the ‘switch variable’, a crucial innovation within Co-LLM, activates. This variable helps determine which tokens of the response would benefit from the expert model’s input, so that specialized knowledge is integrated into the response as it is being generated.

Imagine a scenario where a general LLM is tasked with naming extinct bear species. As it constructs its response, Co-LLM’s switch variable intelligently identifies areas lacking sufficient expertise, prompting the model to call for the specialized LLM’s assistance. This combination allows for richer, more accurate responses crafted through a collaborative effort, thereby improving the output quality significantly.
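The token-level hand-off described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the function `collaborative_decode`, the `threshold` parameter, and the toy stand-ins `toy_base`, `toy_expert`, and `toy_switch` are all hypothetical names invented here. In a real system the switch would be learned from data and the two "models" would be actual LLMs.

```python
from typing import Callable, List, Tuple

Token = str
# A "model" here is any function mapping the tokens so far to the next token.
NextToken = Callable[[List[Token]], Token]
# The switch maps the tokens so far to a probability of deferring to the expert.
Switch = Callable[[List[Token]], float]


def collaborative_decode(
    prompt: List[Token],
    base: NextToken,
    expert: NextToken,
    switch: Switch,
    threshold: float = 0.5,  # hypothetical cut-off, not from the article
    max_new_tokens: int = 20,
    eos: Token = "<eos>",
) -> Tuple[List[Token], List[str]]:
    """Generate token by token, deferring to the expert when the switch fires."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_new_tokens):
        use_expert = switch(tokens) > threshold
        token = expert(tokens) if use_expert else base(tokens)
        if token == eos:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources


# Toy stand-ins: each "model" replays a canned answer, one token per step.
PROMPT = ["Name", "an", "extinct", "bear", ":"]
BASE_SCRIPT = ["One", "extinct", "bear", "is", "the", "panda", "bear", "<eos>"]
EXPERT_SCRIPT = ["One", "extinct", "bear", "is", "the", "cave", "bear", "<eos>"]


def toy_base(tokens: List[Token]) -> Token:
    return BASE_SCRIPT[len(tokens) - len(PROMPT)]


def toy_expert(tokens: List[Token]) -> Token:
    return EXPERT_SCRIPT[len(tokens) - len(PROMPT)]


def toy_switch(tokens: List[Token]) -> float:
    # Pretend the base model is unsure only about the species name after "the".
    return 0.9 if tokens[-1] == "the" else 0.1


generated, sources = collaborative_decode(PROMPT, toy_base, toy_expert, toy_switch)
print(" ".join(generated))  # One extinct bear is the cave bear
print(sources)
```

In this toy run only the species name comes from the expert; every other token is produced by the base model, which is the behavior the switch variable is meant to enable.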

One of the key aspects of Co-LLM’s design is its ability to learn from domain-specific data. The general LLM can not only generate text but also identify areas of weakness in its own knowledge. For example, in tasks requiring biomedical knowledge or the solving of mathematical expressions, Co-LLM can pinpoint challenging queries and direct them to specialized models trained specifically for those tasks. This self-aware approach mirrors human behavior, where individuals recognize when to rely on someone with greater expertise.

As Shannon Shen, one of the lead researchers on the project, notes, the methodology is revolutionary in that it allows models to organically learn patterns of collaboration, akin to human cognitive processes of seeking help. By leveraging labeled and domain-specific data, Co-LLM fosters a framework where each model can complement the other, thus optimizing performance.

The performance metrics of Co-LLM paint a compelling picture of its effectiveness. In tests such as solving a mathematical expression, the general-purpose model working alone produced erroneous calculations, demonstrating a clear need for specialized intervention. With Co-LLM, however, the integration of a specialized mathematical model dramatically improved accuracy, showcasing its advantage over models operating independently.

Furthermore, Co-LLM exhibits efficiency in processing by only activating the specialized LLM when necessary, thus conserving computational resources. This selective engagement, contrasting with other techniques requiring all models to work concurrently, marks a significant advancement in how LLMs can operate alongside one another.
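To make the efficiency argument concrete, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that the base model runs on every token, that the expert runs only on tokens where the switch probability exceeds a threshold, and that each call has a fixed cost. The function `decoding_cost`, the cost units, and the sample probabilities are hypothetical and do not come from the Co-LLM evaluation.

```python
from typing import Sequence, Tuple


def decoding_cost(
    switch_probs: Sequence[float],
    base_cost: int,
    expert_cost: int,
    threshold: float = 0.5,  # hypothetical cut-off for deferring to the expert
) -> Tuple[int, int]:
    """Return (selective_cost, always_both_cost) in arbitrary cost units."""
    n_tokens = len(switch_probs)
    deferred = sum(1 for p in switch_probs if p > threshold)
    # Selective: the base model runs on every token, the expert only when needed.
    selective = n_tokens * base_cost + deferred * expert_cost
    # Baseline: both models run on every token.
    always_both = n_tokens * (base_cost + expert_cost)
    return selective, always_both


# Made-up switch probabilities for an 8-token response.
probs = [0.1, 0.2, 0.9, 0.1, 0.8, 0.3, 0.1, 0.05]
selective, always_both = decoding_cost(probs, base_cost=1, expert_cost=10)
print(selective, always_both)  # 28 88
```

Under these made-up numbers the expert is called for two of eight tokens, so the selective scheme costs roughly a third of running both models on every token. The real saving depends on how often the switch fires and on the relative size of the two models.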

The implications of the Co-LLM system extend beyond mere collaborative functionality. The team envisions enhancing the framework further by incorporating a backward correction mechanism, allowing for reassessment if an expert model fails to provide the correct response. This iterative improvement could dramatically elevate the reliability of AI outputs.

Moreover, updating the expert model as new information becomes available, while training only the base model, would help keep responses relevant and accurate. This has potential applications in keeping corporate documents up to date and keeping clinical records accurate in real time.

Co-LLM represents a significant stride towards fostering collaborative intelligence in AI. By mimicking human teamwork and adaptability, Co-LLM not only improves the accuracy of language responses but also enhances efficiency in processing. As researchers refine this model further, it holds the promise of revolutionizing how AI interacts with domain-specific knowledge, ultimately leading to more informed and accurate outcomes across various industries. The journey of AI collaboration is just beginning, and innovations like Co-LLM will serve as crucial stepping stones in its evolution.
