In recent years, deep learning has transformed sectors ranging from healthcare diagnostics to financial forecasting. These sophisticated models come at a cost, however: their heavy computational demands often push users toward cloud-based services. That reliance on cloud infrastructure raises serious data-security concerns, particularly in healthcare, where sensitive patient information is at stake. Let’s look at how a recent quantum-based security protocol aims to address these challenges.

Running AI workloads in the cloud creates substantial hurdles for data confidentiality and security. In medical settings, fear of data breaches can discourage hospitals from using AI tools to analyze confidential patient information. A hospital may have the opportunity to improve its diagnostics yet be unable to take advantage of it because of privacy concerns. Balancing the power of large AI models against patient confidentiality remains a significant challenge.

Researchers at MIT have proposed a promising solution: a security protocol that uses the quantum properties of light to protect sensitive data exchanged between clients and cloud servers during deep learning computations. If it holds up in practice, the protocol could change the usual assumption that stronger data security in cloud computing must come at the cost of model performance.

At the heart of the MIT researchers’ approach is a protocol that encodes data in the laser light used in fiber-optic communication systems. The protocol relies on the no-cloning theorem, a principle of quantum mechanics stating that an unknown quantum state cannot be copied perfectly. As a result, an attacker cannot duplicate the encoded data in transit, and any attempt to intercept or measure it disturbs the signal in a way that can be detected. This makes the protocol a strong barrier against eavesdropping.
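The article does not give the details of the MIT encoding, so the sketch below uses a different, textbook scheme, BB84 quantum key distribution, purely to illustrate why no-cloning makes eavesdropping detectable. It is a classical simulation of BB84’s measurement statistics, not a simulation of photons or of the MIT protocol; the function name and parameters are illustrative assumptions. An eavesdropper who cannot copy the state must measure it in a guessed basis, and wrong guesses show up as errors in the bits the two honest parties later compare.

```python
import random


def bb84_error_rate(n_bits: int, eavesdrop: bool, seed: int = 0) -> float:
    """Return the fraction of mismatched bits in the sifted BB84 key.

    Toy classical model: each qubit carries a bit in either the rectilinear
    ('+') or diagonal ('x') basis. Measuring in the wrong basis yields a
    uniformly random bit and leaves the qubit prepared in the measured basis.
    """
    rng = random.Random(seed)
    errors = 0
    sifted = 0
    for _ in range(n_bits):
        bit = rng.randint(0, 1)
        basis = rng.choice("+x")
        # The qubit in flight: (bit value, basis it is currently prepared in).
        q_bit, q_basis = bit, basis
        if eavesdrop:
            # Intercept-resend attack: the eavesdropper cannot clone the
            # unknown state, so she measures in a random basis and resends.
            eve_basis = rng.choice("+x")
            if eve_basis != q_basis:
                q_bit = rng.randint(0, 1)
            q_basis = eve_basis
        bob_basis = rng.choice("+x")
        bob_bit = q_bit if bob_basis == q_basis else rng.randint(0, 1)
        # Sifting: keep only positions where sender and receiver used the same basis.
        if bob_basis == basis:
            sifted += 1
            errors += bob_bit != bit
    return errors / sifted if sifted else 0.0


print(f"no eavesdropper:  {bb84_error_rate(20000, eavesdrop=False):.3f}")
print(f"intercept-resend: {bb84_error_rate(20000, eavesdrop=True):.3f}")
```

Without an eavesdropper the sifted key has no errors; with the intercept-resend attack, roughly a quarter of the sifted bits disagree, which the honest parties can spot by publicly comparing a sample of them.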

Notably, the team, with postdoc Kfir Sulimany as lead author, showed that the protocol can guarantee security while the deep learning model still reaches 96% accuracy. The result suggests that, at least in this setting, security need not come at the expense of performance. As Sulimany puts it, “Our protocol enables users to leverage these powerful models without compromising the privacy of their data.”

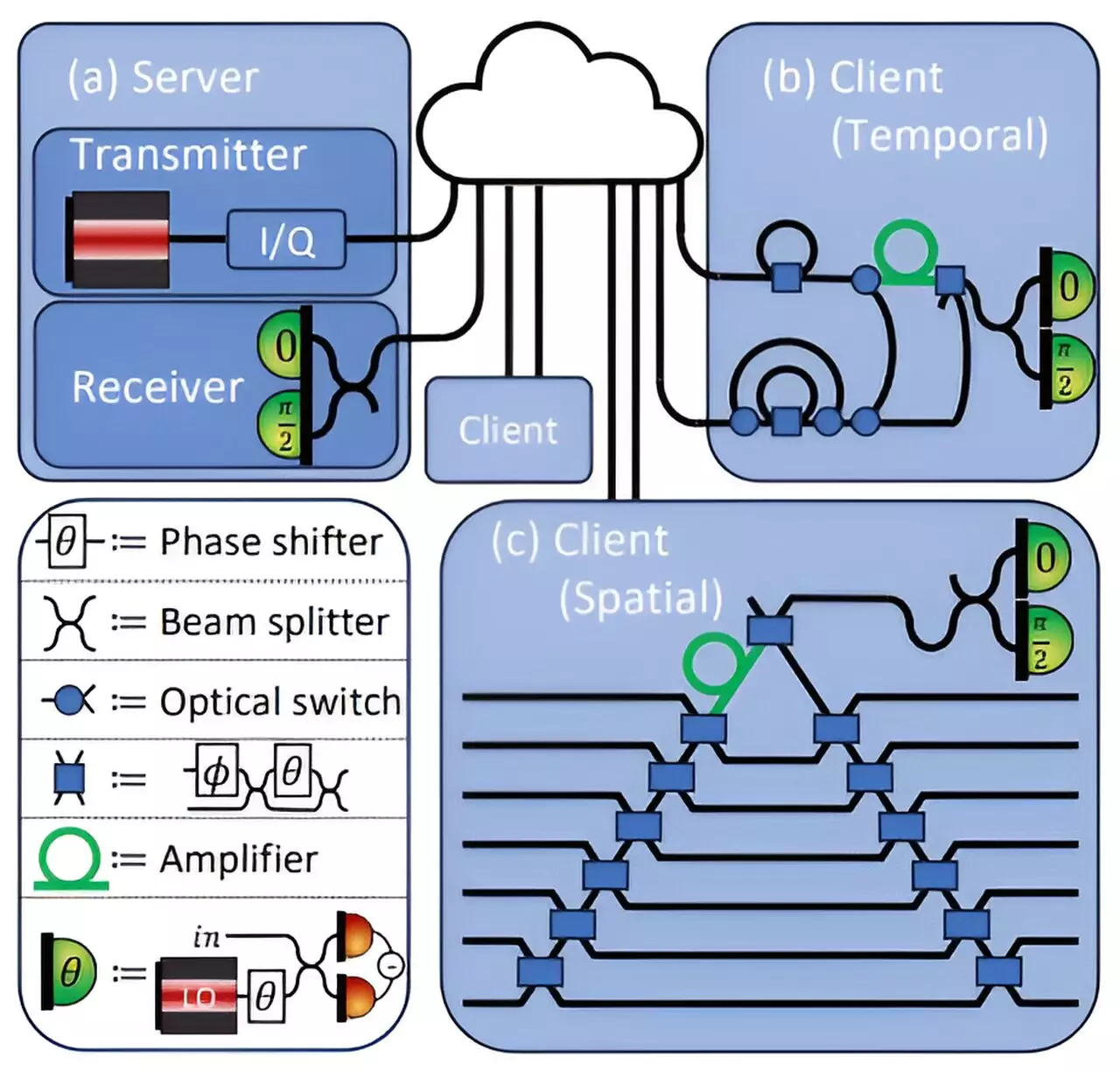

The architecture of the MIT protocol centers on a scenario in which a client wants a prediction, such as whether a medical image shows signs of cancer, from a model hosted on a central server. In a conventional setup, the client would have to upload its sensitive data to that server, which exposes it. Here both parties have something to protect: the client’s confidential data and the server’s proprietary model. The protocol is designed so that the server learns nothing about the client’s data, and the client learns nothing about the model beyond the prediction it needs.

Instead of receiving the client’s data, the server encodes the weights of a deep neural network into an optical field and sends that light to the client, which uses it to run the network on its confidential input. The client measures only what it needs at each layer to feed the result into the next one, so it obtains the final prediction without being able to copy the underlying model weights, a restriction enforced by the no-cloning theorem. This guards against model theft while the client’s data never leaves its side.
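The division of roles described above can be sketched as a purely classical toy. The `Server` and `Client` classes and the two-layer network below are hypothetical illustrations, not the researchers’ code. The sketch shows only the data flow: the server releases one layer of weights at a time, the client applies it to its private input, and only the final predicted class is kept. It offers no security at all, since a classical weight matrix can simply be copied; preventing that copy is exactly what the quantum encoding adds.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


class Server:
    """Hypothetical model owner: holds the weights, releases one layer at a time."""

    def __init__(self, layers: List[Matrix]):
        self._layers = layers

    def num_layers(self) -> int:
        return len(self._layers)

    def send_layer(self, i: int) -> Matrix:
        # Classical stand-in for encoding layer i's weights into light.
        # A client could store this copy; in the quantum protocol the
        # no-cloning theorem is what rules that out.
        return self._layers[i]


class Client:
    """Hypothetical data owner: its input is never sent to the server."""

    def __init__(self, private_input: Vector):
        self._x = private_input

    def run(self, server: Server) -> int:
        h = self._x
        n = server.num_layers()
        for i in range(n):
            w = server.send_layer(i)
            z = [sum(wij * hj for wij, hj in zip(row, h)) for row in w]
            # ReLU on hidden layers; raw scores on the output layer.
            h = z if i == n - 1 else [max(0.0, v) for v in z]
        # Keep only the final prediction: the index of the highest score.
        return max(range(len(h)), key=lambda k: h[k])


server = Server([
    [[1.0, -1.0], [0.5, 0.5]],  # hidden layer
    [[1.0, 0.0], [0.0, 1.0]],   # output layer
])
print(Client([2.0, 1.0]).run(server))
```

With these toy weights, the input `[2.0, 1.0]` is classified as class 1 and `[3.0, 0.0]` as class 0.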

One of the more intriguing components of the protocol is its use of error measurement. Because of the no-cloning theorem, the client unavoidably introduces tiny errors into the model while measuring its result. When the server receives the residual light sent back by the client, it measures these errors to check for anomalies that would indicate information has leaked. According to the research, the amount of information that can leak is minimal and would not give an adversary meaningful insight into either party’s data. These checks target what is often the most vulnerable point of cloud-based AI: the exchange of data between client and server.
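The server-side check can be illustrated with another classical stand-in. This is not the security analysis from the MIT work: the Gaussian noise below is an assumed model of the measurement back-action, and the functions `measure_and_return` and `server_check`, the noise levels, and the acceptance threshold are all hypothetical. The idea it shows is that an honest client disturbs the weights only slightly, while a client that tries to extract more information (modeled here, by assumption, as a larger disturbance) leaves a measurably larger error in what it returns.

```python
import random
from typing import List


def measure_and_return(weights: List[float], disturbance: float,
                       rng: random.Random) -> List[float]:
    """Hypothetical client step: using the weights perturbs them.

    `disturbance` is the standard deviation of the assumed Gaussian
    perturbation. A client probing the model more aggressively is modeled
    as disturbing it more.
    """
    return [w + rng.gauss(0.0, disturbance) for w in weights]


def server_check(sent: List[float], returned: List[float],
                 threshold: float) -> bool:
    """Hypothetical server step: accept only if the mean squared error is small."""
    mse = sum((r - s) ** 2 for s, r in zip(sent, returned)) / len(sent)
    return mse <= threshold


rng = random.Random(1)
weights = [rng.uniform(-1.0, 1.0) for _ in range(10_000)]
honest_sigma = 0.01
threshold = 2 * honest_sigma ** 2  # tolerate up to twice the expected honest error

honest = measure_and_return(weights, honest_sigma, rng)
greedy = measure_and_return(weights, 0.05, rng)
print("honest client accepted:", server_check(weights, honest, threshold))
print("greedy client accepted:", server_check(weights, greedy, threshold))
```

With 10,000 weights the error estimate is tight, so the honest client falls well under the threshold and the greedy one well above it; choosing a real threshold would depend on the physical noise model, which this sketch does not attempt to capture.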

Looking ahead, the researchers want to explore settings beyond a single client and server, in particular federated learning, an approach in which multiple parties collaborate to improve a shared model while keeping their individual datasets private. As sharing data across institutions becomes essential for building comprehensive AI models, technology of this kind is likely to grow in relevance.
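To make the federated learning idea concrete, here is a minimal sketch of federated averaging (FedAvg), a widely used classical federated learning scheme. It is a generic illustration, not the researchers’ plan: it contains no quantum protection, and the two “hospital” datasets, the one-parameter model, and the learning-rate and round counts are hypothetical. Each party trains on its own data and shares only its updated model, which the coordinator averages, weighted by dataset size.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave their owner


def local_update(w: float, data: Dataset, lr: float = 0.05,
                 epochs: int = 5) -> float:
    """One party's training: gradient descent on squared error for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(parties: List[Dataset], rounds: int = 20) -> float:
    """FedAvg-style loop: parties share model weights, never raw data."""
    w_global = 0.0
    total = sum(len(d) for d in parties)
    for _ in range(rounds):
        local_ws = [local_update(w_global, d) for d in parties]
        # Weight each party's model by the amount of data it trained on.
        w_global = sum(w * len(d) for w, d in zip(local_ws, parties)) / total
    return w_global


hospital_a = [(1.0, 3.0), (2.0, 6.0)]
hospital_b = [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)]
print(round(federated_averaging([hospital_a, hospital_b]), 3))
```

Both toy datasets follow y = 3x, so the shared weight converges to 3 even though neither party ever sees the other’s records. A quantum-secured variant would aim to protect the exchanged model updates as well, which plain FedAvg does not do.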

The researchers also hope to study whether the protocol could be applied to quantum operations rather than only the classical operations examined so far, a step that could lead to an even more secure computational infrastructure. As Eleni Diamanti, a CNRS research director not involved in the project, notes, this work brings together fields that have historically remained distinct, deep learning and quantum cryptography, to create a more resilient framework for securing distributed architectures.

Quantum security protocols like this one open an exciting frontier in protecting privacy during digital information exchange. As we try to balance innovation with protection, advances such as this work at MIT are early but meaningful steps toward technology that serves society both securely and effectively.
