The world of art keeps evolving as new technologies push creative boundaries. One such advance is the development of deep learning algorithms and generative models that can produce artistic content automatically. While most AI-generated art is produced entirely in software, researchers at Universidad Complutense de Madrid and Universidad Carlos III de Madrid have taken a different approach. They have developed a deep learning-based model that allows a humanoid robot to sketch pictures, much as a human artist would.

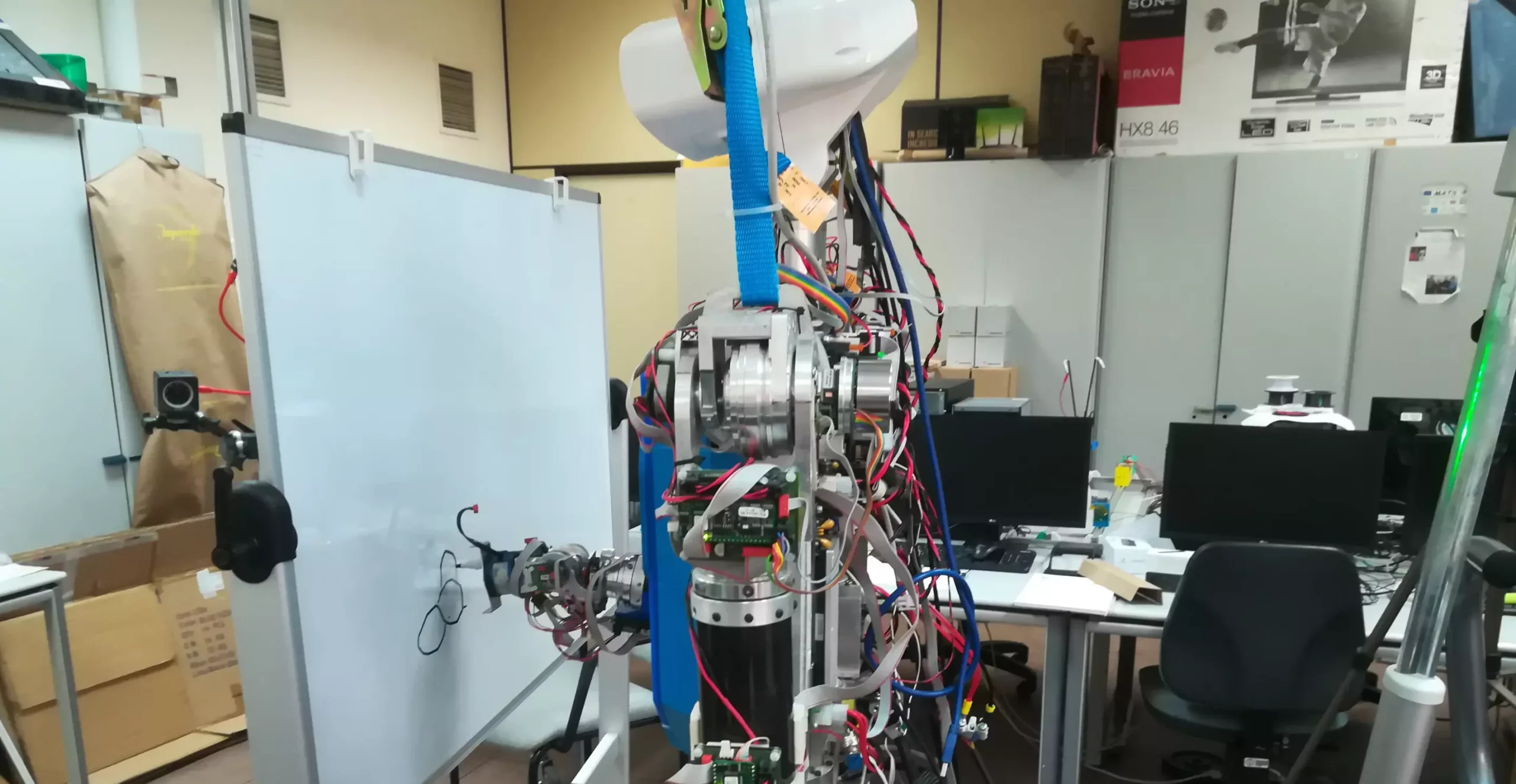

The researchers behind the project set out to create a robot that could actively engage in creative processes. Existing robotic systems tend to work like printers, reproducing images generated by algorithms. By contrast, the humanoid robot developed by Fernandez-Fernandez and his colleagues uses deep reinforcement learning to create sketches stroke by stroke, mimicking the way human artists work. The goal was not to create complex paintings but to improve the robot’s control stage in painting applications.

The researchers built on earlier work on algorithms that plan the actions of creative robots, combining different approaches from that research to improve the robot’s sketching skills. The robotic sketching system is based on a Deep Q-Learning framework, a type of approach that allows robots to complete complex manual tasks in various environments. By carefully planning the robot’s actions, the researchers were able to create a system that exhibits human-like painting skills.
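For readers unfamiliar with Q-learning, the core idea can be sketched in a few lines. This is a generic illustration of the temporal-difference target and epsilon-greedy action selection used in Q-learning methods in general, not the researchers' implementation. The function names, the discount factor, and the idea of one Q-value per candidate stroke position are assumptions made for illustration.

```python
import random
from typing import Sequence


def td_target(reward: float, next_q_values: Sequence[float],
              gamma: float = 0.99, done: bool = False) -> float:
    """Generic Q-learning target: reward plus discounted best future value.

    In Deep Q-Learning, next_q_values would come from a neural network;
    here they are passed in directly (illustrative assumption).
    """
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   rng: random.Random) -> int:
    """Pick a random action with probability epsilon, else the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical Q-values for three candidate stroke positions.
q_values = [0.1, 0.9, 0.3]
best_action = epsilon_greedy(q_values, epsilon=0.0, rng=random.Random(0))
target = td_target(reward=1.0, next_q_values=[0.5, 2.0], gamma=0.5)
```

With `epsilon` set to zero the agent always picks the highest-valued action; during training a nonzero value lets it explore other strokes.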

The neural network behind the robotic sketching system is divided into three interconnected parts: the global network, the local network, and the output network. Together, these networks extract features from the canvas and the painting position through convolutional layers and use them to generate the next painting positions. Two additional input channels, one providing distance-related information and one providing painting tool information, further guide the training of the network. A pre-training step based on a random stroke generator was also introduced to improve the system’s painting skills.
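To make the idea of extra input channels concrete, here is a minimal sketch of how a canvas might be stacked with a distance channel and a tool channel before being passed to a network. The article does not specify how these channels are encoded, so everything here is an assumption: the distance channel is taken to be the normalized distance from each cell to the current pen position, the tool channel is a constant plane marking whether the pen is down, and all function names are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]


def distance_channel(size: int, pen_row: int, pen_col: int) -> Grid:
    """Assumed encoding: normalized Euclidean distance to the pen position."""
    max_dist = math.hypot(size - 1, size - 1) or 1.0  # avoid dividing by zero
    return [
        [math.hypot(r - pen_row, c - pen_col) / max_dist for c in range(size)]
        for r in range(size)
    ]


def tool_channel(size: int, pen_down: bool) -> Grid:
    """Assumed encoding: a constant plane, 1.0 if the pen touches the canvas."""
    value = 1.0 if pen_down else 0.0
    return [[value] * size for _ in range(size)]


def build_input(canvas: Grid, pen_row: int, pen_col: int,
                pen_down: bool) -> List[Grid]:
    """Stack the canvas with the two extra channels (square canvas assumed)."""
    size = len(canvas)
    return [
        canvas,
        distance_channel(size, pen_row, pen_col),
        tool_channel(size, pen_down),
    ]


blank_canvas = [[0.0] * 3 for _ in range(3)]
channels = build_input(blank_canvas, pen_row=0, pen_col=0, pen_down=True)
```

Encoding the tool state as a full plane is a common way to feed a scalar flag to convolutional layers, though the actual system may do this differently.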

To translate the distances and positions observed in AI-generated images into physical canvas movements, the researchers devised a strategy that uses a discretized virtual space within the physical canvas. This approach allows the robot to translate the painting positions provided by the model directly into real-world actions. The researchers regard the introduction of advanced control algorithms in a real robot painting application as a significant achievement of the work.
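The mapping described above can be sketched as a simple grid-to-coordinates conversion. This is an illustration under stated assumptions, not the researchers' code: the `CanvasGrid` class, the millimetre units, and the choice to target the centre of each cell are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CanvasGrid:
    """Hypothetical discretized virtual space laid over a physical canvas."""
    rows: int
    cols: int
    origin_x_mm: float  # physical x of the grid's top-left corner
    origin_y_mm: float  # physical y of the grid's top-left corner
    width_mm: float
    height_mm: float

    def cell_to_physical(self, row: int, col: int) -> Tuple[float, float]:
        """Return the physical (x, y) of the centre of a grid cell."""
        if not (0 <= row < self.rows and 0 <= col < self.cols):
            raise ValueError(f"cell ({row}, {col}) is outside the grid")
        cell_w = self.width_mm / self.cols
        cell_h = self.height_mm / self.rows
        x = self.origin_x_mm + (col + 0.5) * cell_w
        y = self.origin_y_mm + (row + 0.5) * cell_h
        return (x, y)


# A 4x4 grid over a 200 mm square region whose corner sits at (100, 50).
grid = CanvasGrid(rows=4, cols=4, origin_x_mm=100.0, origin_y_mm=50.0,
                  width_mm=200.0, height_mm=200.0)
first_cell = grid.cell_to_physical(0, 0)
last_cell = grid.cell_to_physical(3, 3)
```

Because the model only ever outputs grid cells, the robot controller needs just this one conversion step to turn a predicted painting position into a target for the arm.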

The deep learning-based model for robot sketching opens the way for more research on robot creativity. The researchers hope that their work will inspire further studies and contribute to the introduction of control policies that enable robots to tackle increasingly complex tasks. By applying Deep Q-Learning to robotic sketching, they have demonstrated the potential for robots to engage in creative tasks that go beyond the scope of classical problems.

The recent advancements in robotic artistry represent a fascinating intersection of technology and creativity. The humanoid robot developed by the researchers showcases the potential for robots to actively engage in creative processes, offering new possibilities for artistic expression. As we continue to explore the capabilities of AI and robotics, the future of art looks brighter and more innovative than ever before.
