Robotic manipulation is critical to enabling robots to complete real-world tasks in varied environments. To train robots to grasp and manipulate objects effectively, developers have been exploring machine learning models. While some of these models have shown success, many require extensive pre-training on large datasets made up primarily of visual data. Researchers at Carnegie Mellon University and Olin College of Engineering have recently explored an alternative: using contact microphones in place of traditional tactile sensors, so that machine learning models can be trained on audio data.

In their study, Mejia, Dean, and their colleagues used a self-supervised approach to pre-train a model on audio-visual representations from AudioSet, a collection of over 2 million 10-second video clips of sounds and music gathered from the internet. By incorporating audio into pre-training, they aimed to improve robot manipulation performance, particularly when objects and locations differ from those seen during training.

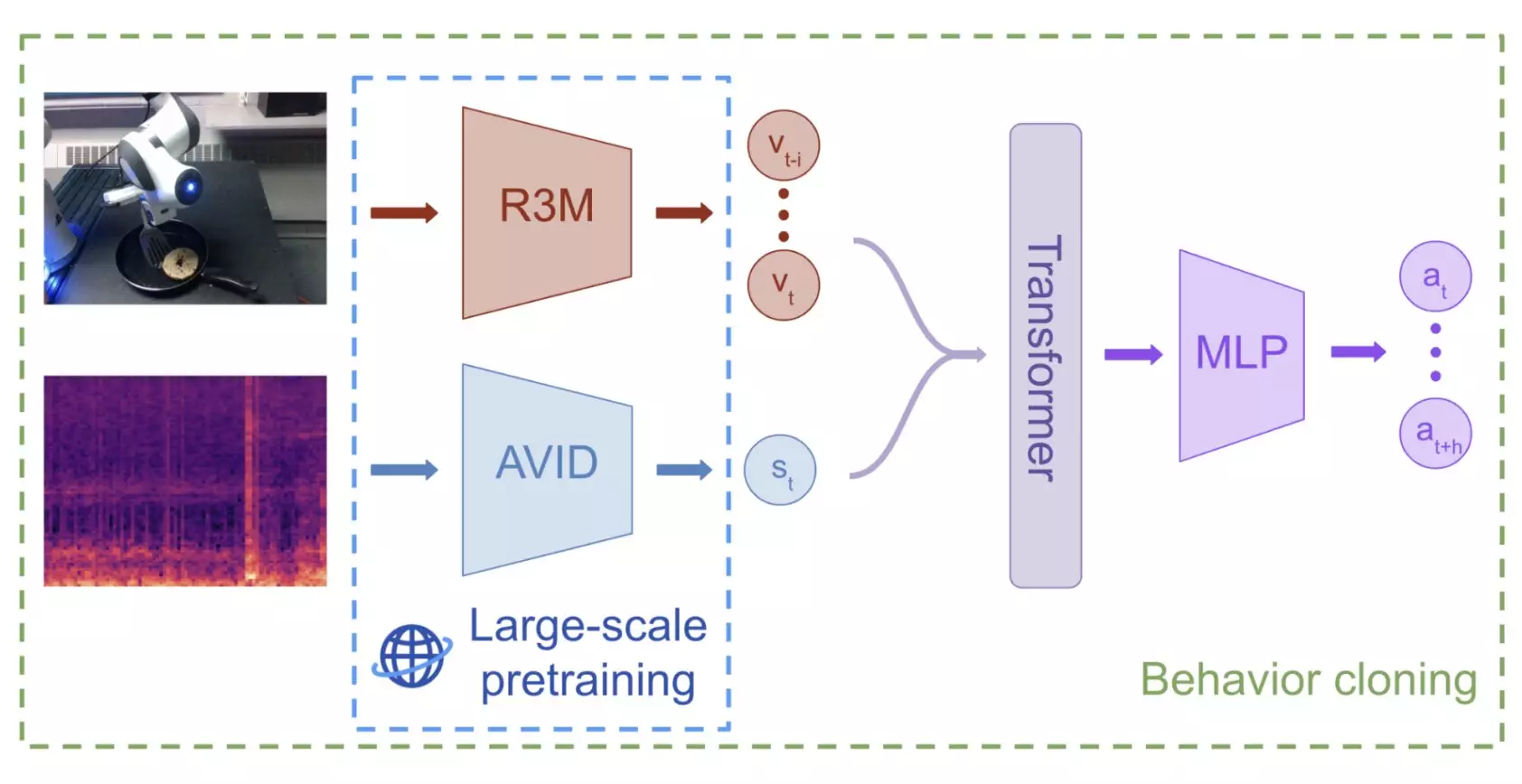

The researchers found that capturing audio with contact microphones made it possible to benefit from large-scale audio-visual pre-training. Their approach, which builds on a self-supervised method called audio-visual instance discrimination (AVID), outperformed models that relied on visual data alone in robot manipulation tasks. The results suggest that multi-sensory pre-training could help advance the capabilities of robots in real-world environments.
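The core idea behind instance discrimination is that the video and audio from the same clip should be embedded close together, while pairings from different clips are pushed apart. The minimal sketch below illustrates that idea as a symmetric, in-batch cross-modal contrastive (InfoNCE-style) loss in plain Python. It assumes you already have one video embedding and one audio embedding per clip. It is a simplified illustration, not AVID's published implementation, and the function names and the 0.07 temperature are hypothetical choices made for this example.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def _mean_nll_of_diagonal(matrix):
    """Average cross-entropy where row i's correct 'class' is column i."""
    total = 0.0
    for i, row in enumerate(matrix):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in row))
        total += log_sum_exp - row[i]
    return total / len(matrix)


def cross_modal_info_nce(video_emb, audio_emb, temperature=0.07):
    """Hypothetical in-batch cross-modal contrastive loss (not AVID's exact code).

    video_emb[i] and audio_emb[i] are assumed to come from the same clip;
    every other pairing in the batch serves as a negative.
    """
    assert len(video_emb) == len(audio_emb), "need one audio embedding per video"
    v = [l2_normalize(x) for x in video_emb]
    a = [l2_normalize(x) for x in audio_emb]
    n = len(v)
    # sims[i][j] = cosine(video_i, audio_j) / temperature
    sims = [[dot(v[i], a[j]) / temperature for j in range(n)] for i in range(n)]
    video_to_audio = _mean_nll_of_diagonal(sims)
    audio_to_video = _mean_nll_of_diagonal([list(col) for col in zip(*sims)])
    return 0.5 * (video_to_audio + audio_to_video)


# Usage: correctly paired clips give a loss near zero; swapped pairings give a large loss.
aligned = cross_modal_info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = cross_modal_info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, mismatched)
```

Minimizing a loss of this kind pushes the two encoders to agree on what a clip looks like and what it sounds like. That shared representation is what a downstream manipulation policy could then build on.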

Looking ahead, Mejia, Dean, and their team envision robots equipped with pre-trained multimodal machine learning models that improve their manipulation skills. The approach still needs further refinement and testing on a broader range of real-world tasks. The researchers also identified an open question for future work: which properties of a pre-training dataset are most conducive to learning audio-visual representations for manipulation policies.

Integrating audio data into the training of machine learning models for robotics is a promising step toward robots that manipulate objects more effectively. Approaches such as pairing contact microphones with audio-visual pre-training open new avenues for developing skilled robot manipulation. As the technology matures, combining audio with traditional visual inputs could help robots perform complex tasks with greater precision and efficiency.
