On 1 August, the European Union's new AI Act entered into force. This comprehensive piece of legislation regulates the deployment of artificial intelligence technologies throughout the EU. Artificial intelligence is versatile: it holds vast potential benefits, yet it can also present significant hazards, particularly in sensitive areas such as health care and employment. A team of computer scientists and legal scholars, led by Holger Hermanns from Saarland University and Anne Lauber-Rönsberg from the Dresden University of Technology, has examined what the AI Act means in practice for programmers. Their findings will be shared this autumn, offering insight into how the act will affect the day-to-day work of those who build AI systems.

Despite the law’s importance, there is a clear disconnect between the legal text and the working programmer. Hermanns points to a question that resonates across the programming community: “What do I actually need to know about this law?” The AI Act runs to 144 pages, and few developers have the time to read it in full. Closing this gap requires precise interpretations that distill the law into actionable guidance for software developers.

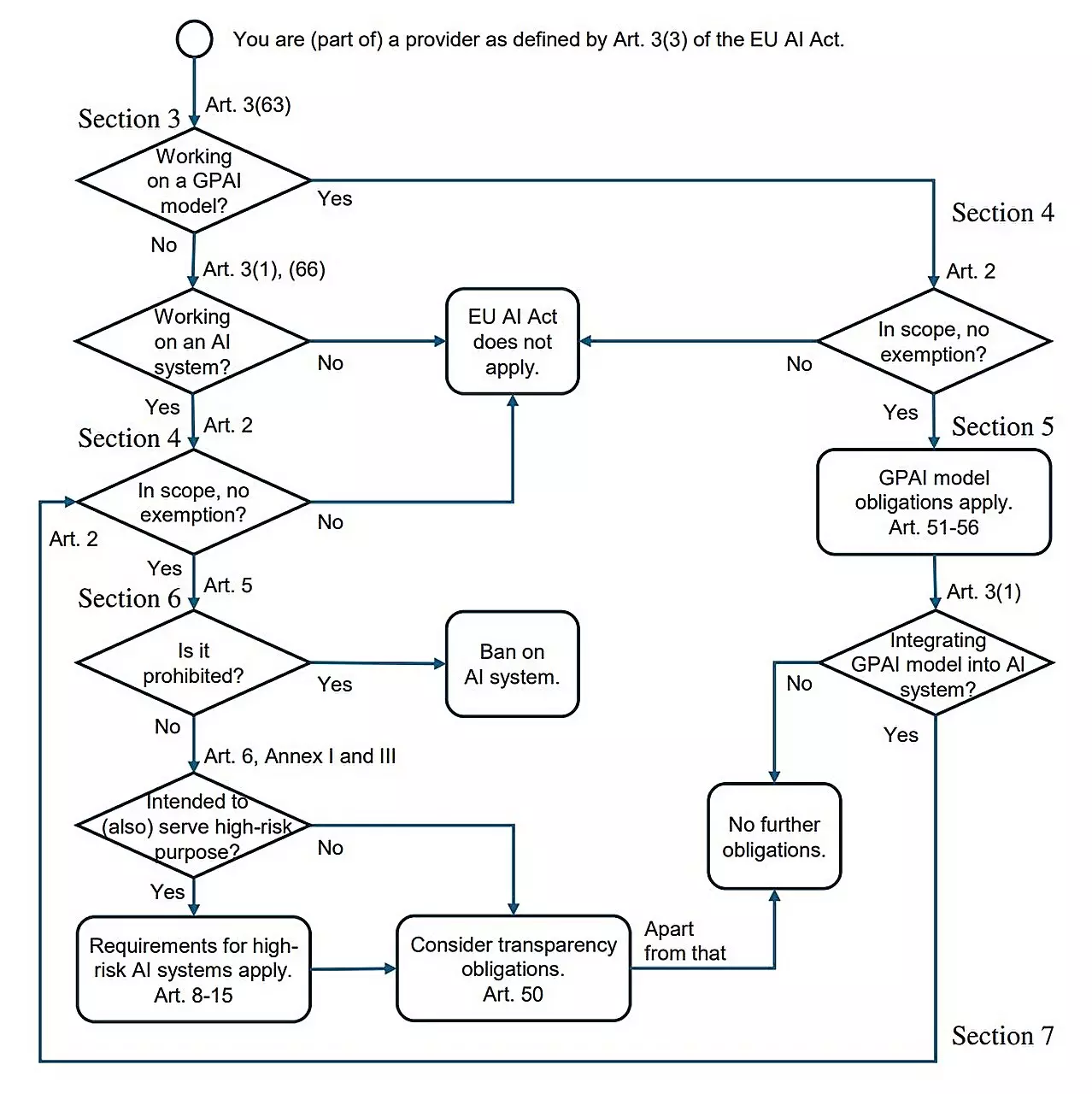

To address these concerns, a research paper titled “AI Act for the Working Programmer” has been produced. Co-authored by Hermanns, doctoral student Sarah Sterz, postdoctoral researcher Hanwei Zhang, and law professor Lauber-Rönsberg, this document seeks to clarify the implications of the AI Act for those working directly in technology and programming. The findings suggest that while the law imposes certain constraints, the day-to-day experiences of most programmers will largely remain unchanged.

Although most AI applications will see little disruption, the AI Act focuses on high-risk systems. These include applications that could significantly affect a person’s life or wellbeing, such as those used for screening job applicants or determining creditworthiness. For instance, software developed to analyze job applications will fall under the stringent requirements of the AI Act once it goes to market. By contrast, the AI that simulates opponents in a video game will escape regulatory scrutiny, because it does not touch on sensitive issues or pose identifiable risks to users.

Hermanns emphasizes how demanding it is to develop high-risk AI systems. Developers must ensure that the training data are adequate and representative, and that the system does not carry biases that could disadvantage certain groups of applicants. Such systems must also keep thorough records, akin to the black box recorders used in aviation, so that events can be reconstructed if necessary. In addition, documentation is required so that users can understand and manage the AI system when it is used in practice.
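For programmers wondering what "black box" style record keeping might look like in code, the following is a minimal Python sketch. It is an illustration only, not a statement of what the AI Act legally requires and not the researchers' method. The function names `append_record` and `verify_log`, the record fields, and the applicant data are all hypothetical. Each decision is stored with its inputs, output, and model version, and each record is chained to the previous one by a hash so that later changes can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_record(log, inputs, output, model_version):
    """Append one decision record, chained to the previous record's hash.

    All field names here are illustrative assumptions, not legal requirements.
    """
    prev_hash = log[-1]["hash"] if log else ""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "prev_hash": prev_hash,
    }
    # Hash the record contents (without the hash field itself).
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(record)
    return record


def verify_log(log):
    """Return True if no record was altered and the chain is unbroken."""
    prev_hash = ""
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if record["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


if __name__ == "__main__":
    log = []
    # Hypothetical decisions of a job-application screening system.
    append_record(log, {"applicant_id": "a-1", "years_experience": 4}, "shortlist", "v0.1")
    append_record(log, {"applicant_id": "a-2", "years_experience": 1}, "reject", "v0.1")
    print(verify_log(log))
```

A real system would write such records to durable, access-controlled storage rather than an in-memory list. The sketch only shows the idea of keeping enough information to reconstruct what the system decided and when.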

The AI Act serves as a framework to protect users from discrimination, harm, or injustice caused by artificial intelligence systems. Hermanns notes that while the Act brings new restrictions, many common applications, such as video games or spam filters, will not be significantly affected. The law's requirements apply mainly to systems that are high-risk or expected to cause problems once deployed.

Importantly, the AI Act does not obstruct research and development, which can continue unabated. The law thus balances fostering innovation against ensuring safety, and it poses little risk of Europe falling behind other global leaders in artificial intelligence. In fact, many experts, including Hermanns, are optimistic about the AI Act as a crucial step towards systematic regulation of AI across an entire continent.

The European Union’s AI Act marks a pivotal chapter in the governance of artificial intelligence, addressing potential risks while allowing innovation to continue. The researchers’ findings underline that programmers need to understand what this legislation means for them without being overwhelmed by its complexity. Its focus on high-risk systems, combined with an ethos of responsible development, provides a foundation on which future AI technologies can safely evolve. As discussions about human oversight and best practices for integration continue in parallel, the AI Act lays the groundwork for a balanced approach to artificial intelligence, one that supports both safety and growth in the tech industry.
