The field of speech emotion recognition (SER) has made remarkable strides in recent years, thanks to advancements in deep learning technologies. These systems can interpret and analyze the emotional tone in spoken language, with applications ranging from customer service to mental health assessment. However, the rise of deep learning also brings unforeseen challenges, particularly in the form of adversarial attacks. These attacks aim to exploit the weaknesses inherent in machine learning models, potentially causing significant errors in emotional interpretation.

A recent study conducted by a research team at the University of Milan sheds light on the vulnerability of SER systems to adversarial attacks. Published on May 27 in “Intelligent Computing,” the research meticulously assessed the effects of both white-box and black-box adversarial attacks across various languages and genders. The researchers focused specifically on convolutional neural network long short-term memory (CNN-LSTM) models, which are designed to enhance the efficacy of speech emotion recognition.

The findings established that SER models are highly susceptible to adversarial examples—carefully constructed inputs that mislead the model into producing incorrect predictions. This vulnerability could lead to dire consequences in applications where accurate emotional detection is crucial. The implications of this research extend beyond theoretical discourse; they raise vital concerns regarding the robustness and reliability of systems that rely on emotional cues derived from speech.

The research utilized a variety of adversarial attack techniques to evaluate the effectiveness of both white-box and black-box methodologies. The white-box attacks included approaches such as the Fast Gradient Sign Method (FGSM), Basic Iterative Method, DeepFool, and Jacobian-based Saliency Map Attacks, along with Carlini and Wagner’s method. In contrasting, the black-box methods employed were the One-Pixel Attack and the Boundary Attack. Notably, the Boundary Attack demonstrated significant efficacy, achieving compelling results even with limited access to the models’ internal mechanisms.

Interestingly, the study revealed that black-box attacks could outperform their white-box counterparts under certain conditions, achieving superior results despite the lack of insight into the model’s structural framework. This finding is particularly concerning as it suggests that potential attackers could exploit SER systems more effectively without needing to understand their inner workings—thus increasing the threat landscape faced by these technologies.

The research took an insightful step by including a gender-based analysis of how different adversarial attacks impact male and female speech, as well as variations across languages. The results indicated that while English speakers exhibited the highest susceptibility to attacks, Italian speakers demonstrated greater resilience. Moreover, male samples showed slight advantages in terms of reduced accuracy and perturbation during white-box attacks. Nonetheless, the differences between male and female samples were minor, emphasizing that the vulnerability to adversarial attacks is not heavily gendered.

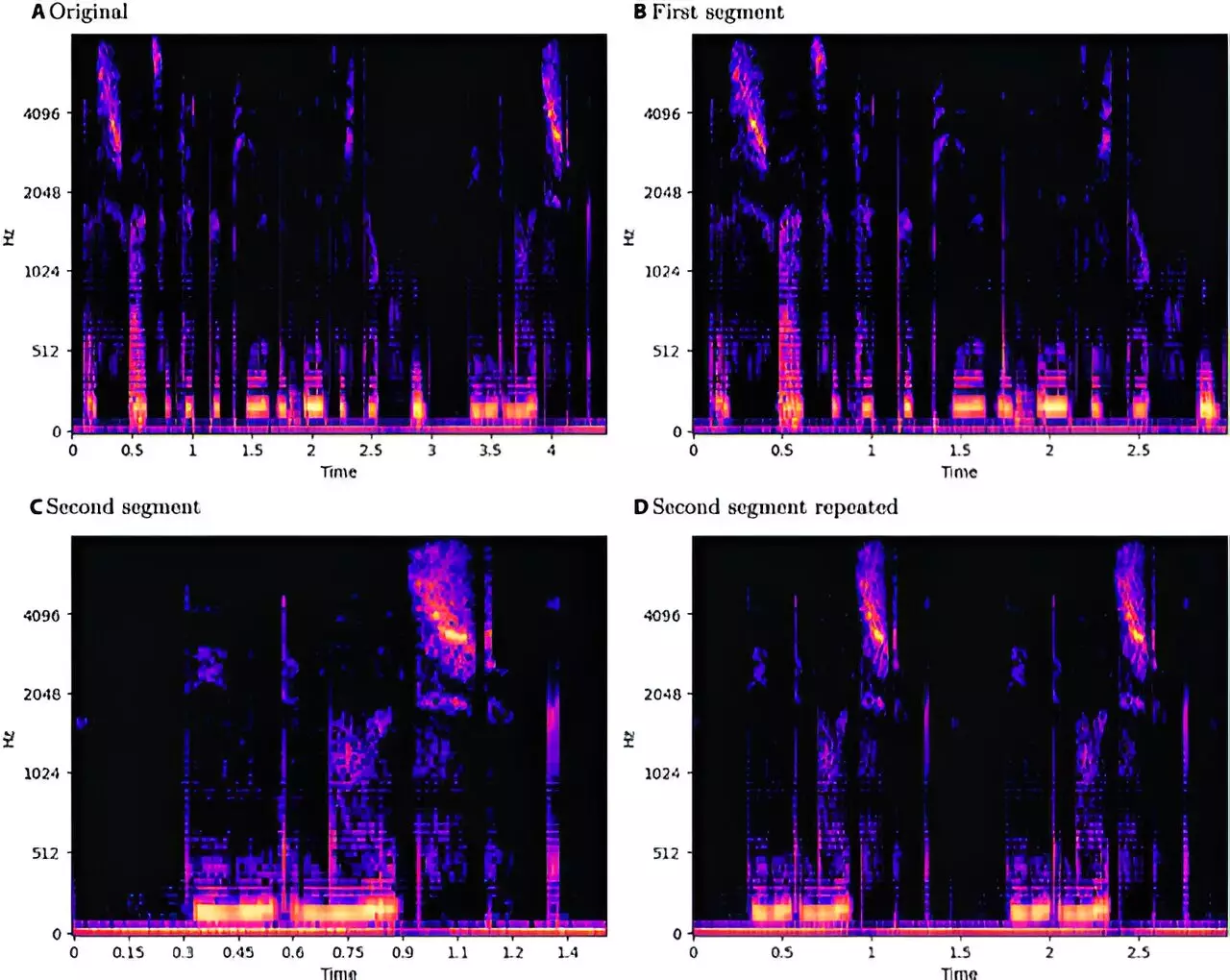

The methodology underlying this research employed a systematic approach to standardize and preprocess audio samples from three datasets—EmoDB, EMOVO, and RAVDESS—maximizing coherence and ensuring effective feature extraction. The inclusion of techniques such as pitch shifting and time stretching allowed the researchers to augment datasets while retaining a meaningful maximum sample duration.

One of the critical discussions arising from this research pertains to the balance between transparency and security in the disclosure of vulnerabilities. While revealing specific weaknesses in SER models could provide a roadmap for potential attackers, withholding such information could be counterproductive. The researchers advocate for openness in communicating these vulnerabilities so that both developers and potential adversaries can understand the realm of risks involved. This awareness is crucial for fortifying systems against attacks and fostering a more secure technological environment.

As speech emotion recognition technology continues to evolve, the challenges posed by adversarial attacks necessitate urgent attention. By illuminating these vulnerabilities, research efforts not only highlight the need for improved defenses but also contribute to the ongoing dialogue surrounding the ethical and practical ramifications of using deep learning in emotional analysis. Strengthening SER systems against adversarial threats is paramount, ensuring that these powerful tools can be trusted in sensitive applications where understanding emotion is essential. Through concerted research and collaboration, it is possible to enhance the robustness of these systems and secure their roles in our increasingly digital interactions.

Leave a Reply