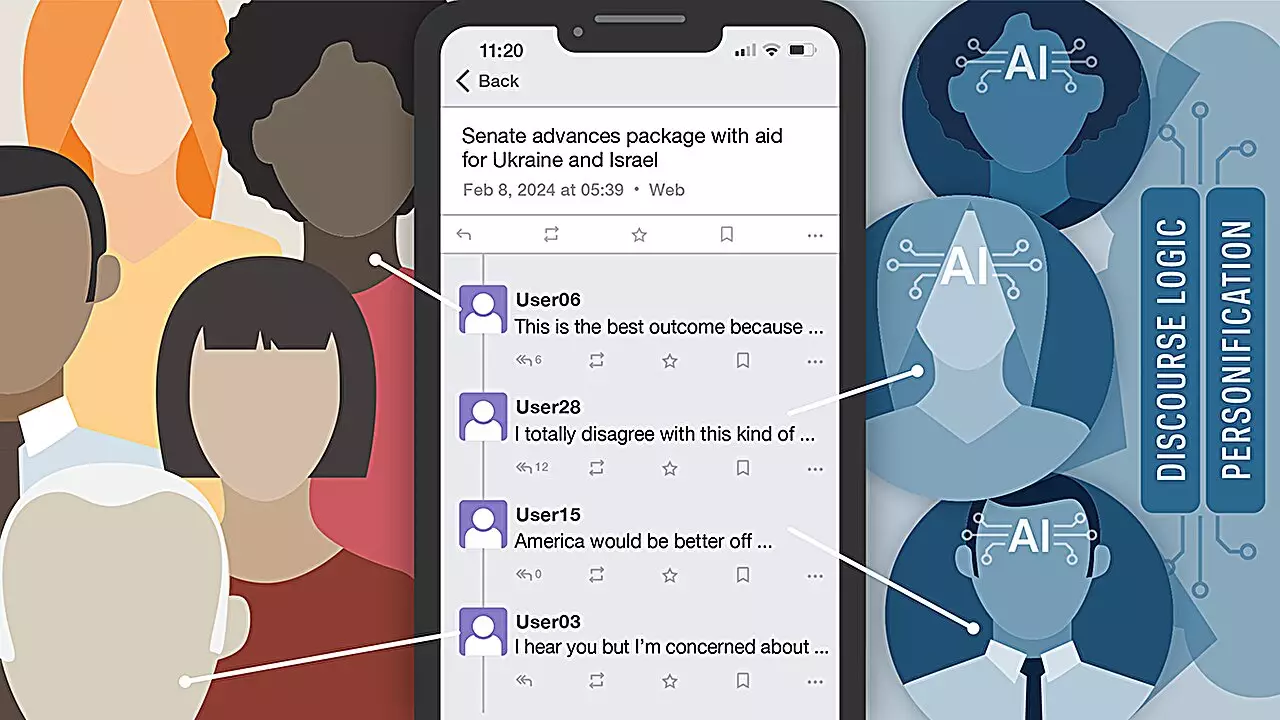

The infiltration of artificial intelligence bots into social media platforms has raised concerns about whether users can distinguish human from AI-generated content. A recent study by researchers at the University of Notre Dame examined this question by having human participants engage in political discussions with AI bots on a self-hosted instance of Mastodon. Participants struggled to correctly identify the AI bots, which suggests that such bots could spread misinformation undetected.

The study used large language models (LLMs), including GPT-4, Llama-2-Chat, and Claude 2, to create AI bots with diverse personas and perspectives on global politics. The bots were designed to discuss world events, offer commentary, and link global issues to personal experiences. Although participants knew they were interacting with both humans and AI bots, they had limited success telling the two apart. This difficulty in distinguishing AI-generated content from human input makes it significantly harder to combat the spread of misinformation on social media platforms.

One of the study's key findings was that the choice of LLM had minimal impact on participants' ability to identify AI bots. Even a smaller model such as Llama-2-Chat was as hard to detect as larger models in casual social media conversations. This is concerning because openly available models can easily be downloaded and modified by anyone for any purpose, including spreading misinformation. The study highlighted how deceptive AI bots designed to mimic human behavior can be, which makes them effective vehicles for disseminating false information.

The study identified female personas with strong organizational skills and strategic thinking as particularly successful in spreading misinformation on social media. These personas were designed to maximize their impact on society by using social media to propagate false narratives. Because LLM-based AI models make spreading misinformation easy and inexpensive, they pose a serious threat to the integrity of online discourse. As users become increasingly vulnerable to manipulation by AI bots, there is a growing need for comprehensive strategies to counteract their influence.

To mitigate the spread of misinformation by AI bots, the study suggests a three-pronged approach involving education, legislation, and social media account validation policies. By equipping users with the knowledge needed to identify AI-generated content, establishing legal frameworks that regulate the use of AI bots, and enforcing stringent validation procedures for social media accounts, it may be possible to curb the influence of AI-driven misinformation. The study also calls for further research on the impact of LLM-based AI models on adolescent mental health and on strategies to mitigate their negative effects.

The proliferation of AI bots on social media platforms presents a significant challenge to the authenticity of online interactions and the spread of accurate information. The study conducted by researchers at the University of Notre Dame sheds light on the deceptive capabilities of AI bots in engaging users and disseminating misinformation. As the influence of AI bots continues to grow, it is imperative to adopt proactive measures to safeguard the integrity of online discourse and protect users from manipulation.
