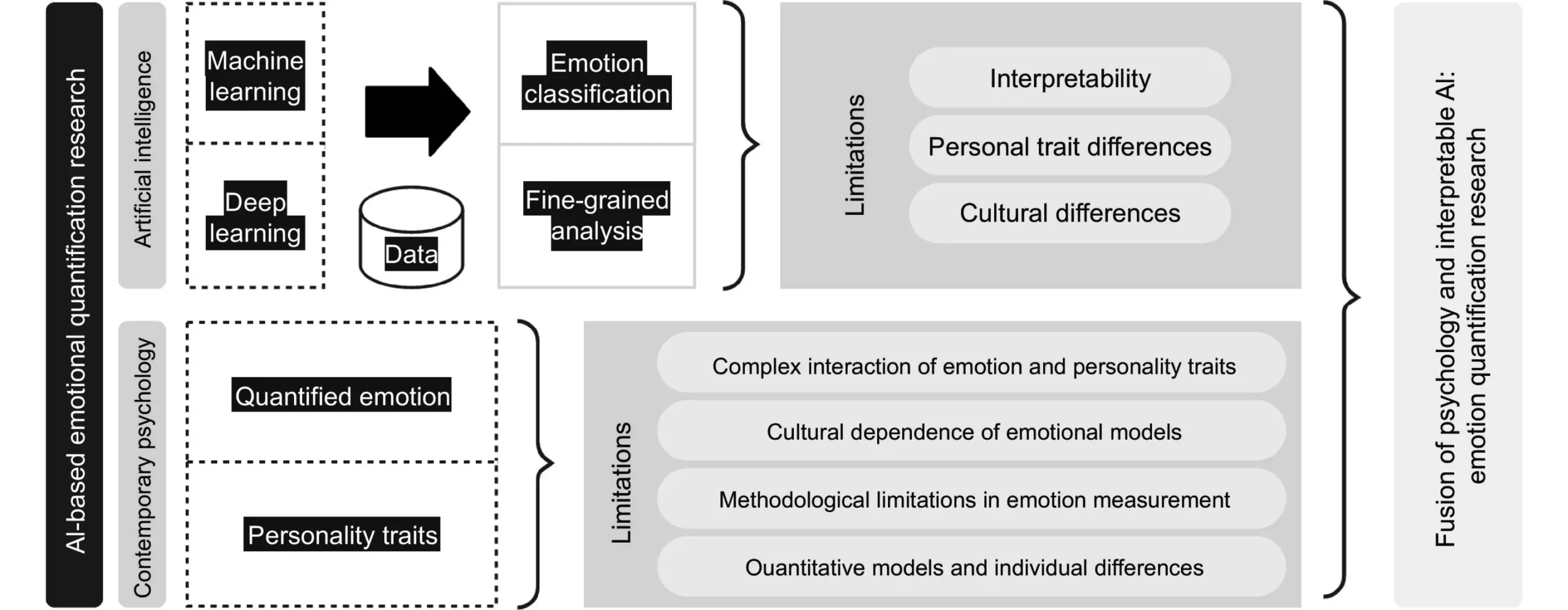

Human emotions are intricate and often defy simple classification. Accurately gauging a person's emotional state is hard for people, and harder still for artificial intelligence (AI). Nonetheless, recent advances suggest that merging traditional psychological techniques with modern AI capabilities could substantially improve how emotions are quantified.

Understanding the Complexity of Human Emotion

Emotions are multifaceted and subjective, making them elusive to define or measure. Conventional methods typically rely on self-reporting or behavioral observations, which can be fraught with biases and inaccuracies. In a world where understanding mental states is increasingly important—especially given the rising emphasis on mental health—scientists have recognized the urgent need for better methodologies. This intersection of psychology and technology points to a future where emotional insight is not only more accurate but also more accessible.

The Role of AI in Emotion Recognition

AI has made substantial strides in analyzing various human behaviors and responses, providing new avenues for interpreting emotional states. Techniques such as facial emotion recognition (FER) and gesture analysis have emerged, allowing machines to learn from visual cues. These methods are pivotal because they can offer insights into emotions that may not be effectively communicated through words alone. By leveraging machine learning algorithms, AI systems can identify subtle patterns and correlations that the human eye might overlook.

Consider, for instance, the combination of FER with physiological data. It is now feasible to integrate electroencephalogram (EEG) recordings of brain activity alongside visual inputs, and to add peripheral measures of emotional arousal such as changes in heart rate or skin conductance. By synthesizing visual and physiological data, researchers can build a more nuanced model of human emotion that reflects its inherent complexities.
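One simple way to picture this kind of combination is "late fusion": each modality produces its own probability estimate over a set of emotions, and the estimates are merged by a weighted average. The sketch below is only an illustration under stated assumptions. The emotion labels, the modality names (`face`, `eeg`), the probabilities, and the weights are all hypothetical, and the article does not say which fusion method researchers actually use.

```python
from typing import Dict, List

# Hypothetical label set, chosen only for illustration.
EMOTIONS: List[str] = ["neutral", "happy", "sad", "angry"]


def late_fusion(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Weighted average of per-modality probability vectors."""
    total = sum(weights[name] for name in modality_probs)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    fused = [0.0] * len(EMOTIONS)
    for name, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{name}: expected {len(EMOTIONS)} probabilities")
        share = weights[name] / total  # normalise so the shares sum to 1
        for i, p in enumerate(probs):
            fused[i] += share * p
    return fused


def predict(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> str:
    """Return the label with the highest fused probability."""
    fused = late_fusion(modality_probs, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return EMOTIONS[best]


# Made-up outputs of two assumed upstream models (not real data).
example_probs = {
    "face": [0.5, 0.3, 0.1, 0.1],  # facial emotion recognition
    "eeg": [0.2, 0.1, 0.6, 0.1],   # EEG-based classifier
}
example_weights = {"face": 0.4, "eeg": 0.6}

print(predict(example_probs, example_weights))  # prints "sad"
```

In this made-up case the face model alone would say "neutral", but the more heavily weighted EEG estimate shifts the fused result to "sad". In practice the weights would have to be learned or validated rather than set by hand.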

Multi-Modal Emotion Recognition and Its Implications

Multi-modal emotion recognition is an emerging strategy for emotion quantification. By fusing inputs from multiple sensory channels, such as sight, sound, and tactile feedback, AI systems can build a more complete picture of an individual’s emotional state than any single channel provides. The implications are far-reaching. Imagine AI systems in healthcare that monitor patients’ emotional states in real time, giving clinicians data that informs treatment plans and patient care strategies. This could lead to highly personalized interventions that respond to patients’ emotional needs.

Feng Liu, a leading researcher in this domain, emphasizes that interdisciplinary collaboration is essential to fully unlocking the potential of emotion quantification technologies. Engagement between specialists in AI, psychology, and psychiatry will facilitate the development of comprehensive solutions that address emotional complexity across different environments.

Despite the promising potential of emotion recognition technologies, several hurdles remain. One critical concern is ensuring the safety and transparency of the systems used. The sensitive nature of emotion quantification necessitates rigorous data handling and privacy measures. As these AI technologies will often deal with intimate personal data, ethical guidelines and regulatory frameworks must be established to protect user information and prevent misuse.

Moreover, in an increasingly globalized world, cultural nuances play a vital role in emotional expression. A one-size-fits-all approach to emotion quantification may lead to misunderstandings or inaccuracies, making it crucial for AI systems to be culturally aware. Tailoring algorithms to accommodate different cultural expressions of emotion will not only enhance reliability but also improve the effectiveness of these systems in diverse settings.

The future of emotion quantification lies in embracing the richness of human complexity through a collaborative, interdisciplinary approach. By integrating established psychological frameworks with the capabilities of AI, new possibilities may open in mental health monitoring, personalized customer experiences, and beyond. As researchers balance technology and empathy, advances in emotion recognition hold the promise of improving our understanding of human feelings, fostering more meaningful human-computer interactions, and enhancing individual well-being.
